Serverless anywhere with Kubernetes

KEDA (Kubernetes-based Event-Driven Autoscaling) is an open-source project built by Microsoft in collaboration with Red Hat, which provides eve

This is part of a journey I started with modernizing governance, security, and cost control systems.

I've been using Azure Functions for years now. I've deployed them almost everywhere, on every type of plan and SKU, and I always tend to go for the more complex deployments where you have complete control over the solution.

Let's take the example of an Azure Function on a simple Consumption plan. You pay as you go, it scales based on your load, and it's almost zero hassle. Sounds good, right? Well, not so much: you deal with very long cold starts, debugging ranges from very hard to brutal, and scaling doesn't always behave the way you would like.

So, what's the solution? You pay more and migrate to the Azure Functions Premium plan, which costs more, gives you more granular control over scaling, and, if you're willing to pay for it, gets rid of cold starts. You get better performance and debugging power because the function is more responsive. You can even host multiple functions under a single plan to save costs; however, you have to be very careful to keep them as stateless as possible, otherwise you'll run into issues. Other solutions? Sure: Dedicated plans let you run Functions on App Service, where you pay the regular monthly App Service cost, and that's it. The performance is there if you're willing to pay for it, there are no more pay-as-you-go costs based on function executions, and debugging is better.

I focus so much on debugging because when you're working with complex "machinery" that works on your machine but not in the cloud, it gets frustrating fast.

For example, I deployed v2 to the dev environment knowing I had broken something in the search for something better. Still, when I tried to quickly find out what was going on, I hit a wall of blank screens, Matrix-style text in Application Insights, and no output from the function because it was dead.

Why did it work on my machine but not in the Consumption, Premium, or App Service environments? Because in the search for something better, I used more resources than those environments allocated. Is it Azure's fault? The Function's fault? No, my fault. All I wanted was something like an OOMKilled event or a failed start telling me I was asking for more than I was paying for. Something. But nada, blank screen.

After some time doing this back-and-forth, I quickly got to the point where "it runs on my machine" had to stop. I've written about KEDA, Functions in containers, UBI images, and so on, but I never found the time to commit production code to that effort.

At this point, I told myself I must put my money where my mouth is and start doing what I say.

Let's do a TLDR of Azure Functions.

They allow you to build trigger-based APIs, whether the trigger is an HTTP request, a queue message, a cron schedule, or an Event Grid event.

I need a platform for:

What hits those checkboxes? Kubernetes. Azure Kubernetes Service for the win.

So, we're going to run Functions in Kubernetes. How do I handle the cluster, configuration, security, and the rest before I get to deploy the code? Terraform, plus Kubernetes configuration management from an Azure DevOps pipeline.

My requirement for all of this is to copy-paste. I want to copy-paste a folder, modify some values and focus on the code, not the infrastructure and deployment context, so let's get started.

I need to replicate the PaaS goodness that Azure Functions and App Service plans provide, but with extra power and control. Taking from the above, I will also need the following:

Those needs are easily met with the Secrets Store CSI Driver (with the Azure Key Vault provider), Workload Identity, and KEDA.

Your functions need to be containerized, so the go-to place to start is Microsoft's Azure Functions images on Docker Hub. TL;DR: copy-paste, and it works. Sort of: if they are HTTP functions, you also need to install and configure NGINX as an ingress controller.

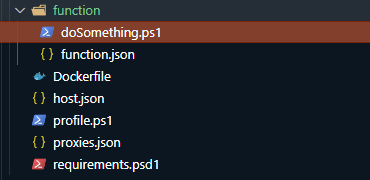

A function folder looks something like the image below, and the Dockerfile looks like this. From here, you can add multiple functions or keep it to one.

```dockerfile
FROM mcr.microsoft.com/azure-functions/powershell:4.13.0-powershell7.2-slim

ENV AzureWebJobsScriptRoot=/home/site/wwwroot \
    AzureFunctionsJobHost__Logging__Console__IsEnabled=true

COPY . /home/site/wwwroot
```

I'm a PowerShell guy, so my language of choice is PowerShell, although I write Python and C# when needed. With the container approach, I can split everything into microservices without worrying about dependencies, missing features, or brain-twisting problems that a library would solve efficiently.

Tip: Don't use the requirements file to install modules. Instead, create a base image with the PowerShell modules you need, set up a scheduled pipeline to build that image, and copy the modules from the base image into the ./modules folder. This cuts Function loading time from n minutes to an instant.
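A minimal sketch of the module-prefetch step that such a scheduled base-image build could run, assuming `pwsh` and the PowerShellGet `Save-Module` cmdlet are available in the build environment. The function name `save_modules` and the `PWSH` override (set it to `echo` to print the commands instead of running them) are hypothetical, and which modules you pass is up to you.

```bash
#!/usr/bin/env bash
# Hypothetical helper: download PowerShell modules into a folder (e.g. ./modules)
# once, at image build time, instead of resolving them from a requirements file
# every time the Functions host starts.
# Usage: save_modules DEST MODULE [MODULE...]
# Set PWSH=echo to print the pwsh invocations instead of running them.
save_modules() {
  local dest=$1
  shift
  local pwsh="${PWSH:-pwsh}"
  local module
  mkdir -p "$dest" || return 1
  for module in "$@"; do
    # Save-Module downloads the module and its dependencies without installing them
    "$pwsh" -NoProfile -NonInteractive -Command \
      "Save-Module -Name '$module' -Path '$dest' -Repository PSGallery -Force" || return 1
  done
}
```

For example, the base-image build could call `save_modules ./modules Az.Accounts Az.Resources`, and each function image then copies that folder in instead of downloading modules at startup.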

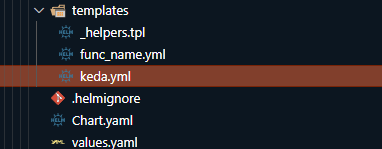

Now that everything is built and stored in an Azure Container Registry, it's time for the hard part: Helm charts.

Why Helm charts? Well, this is the modernization part. When your CI/CD pipeline deploys your code to the PaaS flavor of Functions, it simply builds and deploys. With a Helm chart, you control every aspect of the deployment and get the best of all worlds.

For example:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Release.Name }}-<func_name>
{{ include "function.namespace" . | indent 2 }}
  labels:
    app: {{ .Release.Name }}-<func_name>
spec:
  selector:
    matchLabels:
      app: {{ .Release.Name }}-<func_name>
  template:
    metadata:
      labels:
        app: {{ .Release.Name }}-<func_name>
    spec:
      {{- if eq .Values.<func_name>.azIdentity true }}
      serviceAccountName: {{ .Values.<func_name>.serviceAccount.name }}
      {{- end }}
      containers:
        - name: {{ .Release.Name }}-<func_name>
          image: "{{ .Values.<func_name>.image.repository }}:{{ .Values.<func_name>.image.tag | default .Chart.AppVersion }}"
          imagePullPolicy: {{ .Values.<func_name>.image.pullPolicy }}
          {{- if eq .Values.<func_name>.azIdentity true }}
          livenessProbe:
            {{- toYaml .Values.azuretokenCheck.livenessProbe | nindent 12 }}
          {{- end }}
          ports:
            - containerPort: 80
          resources:
            {{- toYaml .Values.<func_name>.resources | nindent 12 }}
          volumeMounts:
            - name: secrets-store
              mountPath: '/mnt/secrets-store'
              readOnly: true
          env:
            - name: APPINSIGHTS_INSTRUMENTATIONKEY
              valueFrom:
                secretKeyRef:
                  name: {{ .Values.kvSecretName }}
                  key: {{ .Values.appInsightsKey }}
            - name: SERVICEBUS_CONNECTION_STRING
              valueFrom:
                secretKeyRef:
                  name: {{ .Values.kvSecretName }}
                  key: {{ .Values.serviceBusConnectionString }}
      volumes:
        - name: secrets-store
          csi:
            driver: secrets-store.csi.k8s.io
            readOnly: true
            volumeAttributes:
              secretProviderClass: 'kv-azure-sync'
```

The Helm template above defines a Deployment for a Service Bus function. It pulls an Application Insights key and a Service Bus connection string from Azure Key Vault using the Secrets Store CSI Driver, and it authenticates to Azure with Workload Identity: the pod runs under the annotated service account and exchanges its federated token for Azure credentials. It also has a liveness probe that checks whether the token file is present and restarts the container if it isn't.
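The probe's logic is easy to test outside the cluster if it is wrapped in a function. This is a sketch that assumes the default token path used in the values file, which is where the Workload Identity webhook projects the token. The function name and the optional path argument are hypothetical additions so it can be pointed at a local file. Like the original probe, it only checks that the file exists, not that the token is still valid.

```bash
#!/usr/bin/env bash
# Same check as the livenessProbe command, with the token path as an optional
# argument (hypothetical) so it can be exercised against a local file.
token_probe() {
  local token_file="${1:-/var/run/secrets/azure/tokens/azure-identity-token}"
  if [ -f "$token_file" ]; then
    echo "Azure Token exists."
  else
    echo "Azure Token does not exist."
    return 1   # a non-zero exit status is what the kubelet counts as a probe failure
  fi
}

# Local demo against a temporary file instead of the projected token
tmp_token="$(mktemp)"
token_probe "$tmp_token"
token_probe "$tmp_token.missing" || echo "probe failed with exit status $?"
rm -f "$tmp_token"
```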

The values file it's referencing looks like this:

```yaml
azuretokenCheck:
  livenessProbe:
    exec:
      command:
        - sh
        - '-c'
        # Semicolons keep the script valid after YAML folds these lines into one
        - >-
          if [ -f /var/run/secrets/azure/tokens/azure-identity-token ];
          then echo "Azure Token exists.";
          else echo "Azure Token does not exist."; exit 1;
          fi
    initialDelaySeconds: 10
    periodSeconds: 5

func_name:
  image:
    # Repository only: the template appends ":<tag>" itself
    repository: acrimage.azurecr.io/image
    pullPolicy: Always
    tag: 'latest'
  hpa:
    minReplicaCount: 1
    maxReplicaCount: 5
  imagePullSecrets: []
  nameOverride: ''
  fullnameOverride: ''
  azIdentity: true
  serviceAccount:
    name: 'workload-identity'
  resources:
    requests:
      memory: '64Mi'
      cpu: '10m'
    limits:
      memory: '512Mi'
      cpu: '250m'
```

The ScaledObject for KEDA looks like this:

```yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: {{ .Release.Name }}-func_name
  labels: {}
{{ include "function.namespace" . | indent 2 }}
spec:
  scaleTargetRef:
    name: {{ .Release.Name }}-func_name
  minReplicaCount: {{ .Values.func_name.hpa.minReplicaCount }}
  maxReplicaCount: {{ .Values.func_name.hpa.maxReplicaCount }}
  triggers:
    - type: azure-servicebus
      metadata:
        direction: in
        queueName: message-queue
        connectionFromEnv: SERVICEBUS_CONNECTION_STRING
```

The object is quite simple: it reads the minimum and maximum replica counts from the values file and, based on the Service Bus queue length, decides how far to scale between them. KEDA scales by adding replicas to the Deployment (through a Horizontal Pod Autoscaler it manages), and because Kubernetes caches images on each node, new replicas start blazing fast when you get a storm of messages.
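To get a feel for how far KEDA will scale, here is a simplified sketch of the arithmetic. It assumes the azure-servicebus scaler's default `messageCount` target of 5 messages per replica (the ScaledObject above doesn't set it) and the HPA's average-value behaviour of roughly ceil(queue length / target) replicas, clamped to the min/max from the values file. It ignores the HPA's tolerance, stabilization windows, and scale-to-zero activation, and `desired_replicas` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Simplified replica estimate (not KEDA's actual code path).
# Usage: desired_replicas QUEUE_LENGTH TARGET_PER_REPLICA MIN_REPLICAS MAX_REPLICAS
desired_replicas() {
  local queue_length=$1 target=$2 min=$3 max=$4
  # Integer ceiling of queue_length / target
  local desired=$(( (queue_length + target - 1) / target ))
  # Clamp to the ScaledObject's minReplicaCount / maxReplicaCount
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}

for queue in 0 12 100; do
  echo "$queue messages -> $(desired_replicas "$queue" 5 1 5) replica(s)"
done
```

With the values above, an empty queue still keeps one replica warm, and anything beyond 25 waiting messages is capped at five replicas.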

Now wrap it all up in a Helm chart folder.

Now all that remains is to test and create a CI/CD pipeline that deploys the function inside the cluster.

The hardest part of this modernization is creating the Helm chart, because even with the --dry-run and --debug flags you still run into many errors, and there's a lot of trial and error. Still, it's very satisfying once you get it right.
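Catching those errors before the pipeline does is mostly a matter of running Helm's own checks in order. This is a sketch that assumes the chart layout from this post. `validate_chart` and the `HELM` override (set it to `echo` to print the commands) are hypothetical. `helm lint` and `helm template` work offline. `helm upgrade --dry-run` still looks up the existing release, so it needs cluster credentials.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check for the functions chart.
# Usage: validate_chart RELEASE CHART_DIR NAMESPACE
# Set HELM=echo to print the helm commands instead of running them.
validate_chart() {
  local release=$1 chart=$2 namespace=$3
  local helm="${HELM:-helm}"
  # Static checks on chart structure and templates
  "$helm" lint "$chart" || return 1
  # Render every manifest locally; template errors surface here with --debug
  "$helm" template "$release" "$chart" --namespace "$namespace" --debug >/dev/null || return 1
  # Dry run of the same upgrade the pipeline performs
  "$helm" upgrade "$release" "$chart" --install --namespace "$namespace" --dry-run --debug
}
```

From the chart folder, `validate_chart functions . default` mirrors the release name and namespace used by the deployment step.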

The build method is simple: you write some PowerShell that iterates through the function folders and builds and pushes an image for each one.

```yaml
- task: AzureCLI@2
  displayName: Build and push function container images
  inputs:
    azureSubscription: ${{ parameters.serviceConnection }}
    scriptType: "pscore"
    scriptLocation: "inlineScript"
    inlineScript: |
      # One image per function folder; the shared Modules folder is skipped
      $folderPaths = Get-ChildItem folder_path -Directory -Exclude Modules
      # --name takes the registry name, not the login server
      az acr login -n images
      foreach ($folder in $folderPaths)
      {
        # Repository names must be lowercase
        $image = $folder.Name.ToLower()
        # Registry and repository are separated by '/', the tag by ':'
        $repo = "images.azurecr.io/image_repos/$image"
        docker build "$($folder.FullName)" -t "${repo}:latest" -t "${repo}:$(Build.BuildId)"
        docker push "${repo}:latest"
        docker push "${repo}:$(Build.BuildId)"
      }
```

Snippet from an AzDo Pipeline
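For readers more at home in Bash, here is a rough port of the same loop. It assumes the same registry layout (`<registry>/<prefix>/<lowercased folder name>`), a `Modules` folder to skip, and that `az acr login` has already run. `build_and_push` and the `DOCKER` override (set it to `echo` for a dry run) are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical Bash port of the PowerShell build loop.
# Usage: build_and_push FUNCTIONS_ROOT REGISTRY REPO_PREFIX BUILD_ID
# Set DOCKER=echo to print the docker commands instead of running them.
build_and_push() {
  local root=$1 registry=$2 prefix=$3 build_id=$4
  local docker="${DOCKER:-docker}"
  local dir name image
  for dir in "$root"/*/; do
    [ -d "$dir" ] || continue     # glob matched nothing
    name="$(basename "$dir")"
    if [ "$name" = "Modules" ]; then
      continue                    # shared modules folder, not a function
    fi
    # Repository names must be lowercase
    image="$registry/$prefix/$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')"
    "$docker" build "$dir" -t "$image:latest" -t "$image:$build_id" || return 1
    "$docker" push "$image:latest" || return 1
    "$docker" push "$image:$build_id" || return 1
  done
}
```

In a Bash pipeline step, this would be called as `build_and_push folder_path images.azurecr.io image_repos "$(Build.BuildId)"`.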

The deployment part is even more straightforward: you connect to the Kubernetes cluster and then run the helm upgrade command.

```yaml
- task: AzureCLI@2
  displayName: "Deploy Functions Helm Chart"
  inputs:
    azureSubscription: ${{ parameters.serviceConnection }}
    scriptType: "bash"
    scriptLocation: "inlineScript"
    workingDirectory: "$(Pipeline.Workspace)/helm/functions"
    inlineScript: |
      helm upgrade functions . -n default --atomic --create-namespace --install
```

Snippet from an AzDo Pipeline
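There is one caveat with deploying only `latest`. If the rendered manifests don't change between releases, `helm upgrade` has nothing to roll out, so running pods keep the previous image. The sketch below shows one way around it. It assumes the `func_name` values key and the build-ID tag that the build step already pushes. The tag is overridden per release with `--set`, which changes the pod template and triggers a rolling update. The function name is hypothetical; set `HELM=echo` to print the command instead of running it.

```bash
#!/usr/bin/env bash
# Hypothetical deploy helper that pins the image tag to the build ID.
# Usage: deploy_functions RELEASE CHART_DIR NAMESPACE BUILD_ID
deploy_functions() {
  local release=$1 chart=$2 namespace=$3 build_id=$4
  local helm="${HELM:-helm}"
  # Same flags as the pipeline step, plus an explicit tag so every build
  # produces a different pod template and Kubernetes rolls the pods
  "$helm" upgrade "$release" "$chart" \
    --install --atomic --create-namespace \
    --namespace "$namespace" \
    --set "func_name.image.tag=$build_id"
}
```

Inside the pipeline's inline script, this would be called as `deploy_functions functions . default "$(Build.BuildId)"`.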

I'm trying to keep this post short, but it keeps getting longer as I write, even while I cut out some bulk.

Once the pipeline is done and tested, all that remains is to run it and go for a pizza as a reward, because the result is a fully-fledged system that lets me focus on code while everything else is handled automagically.

Whenever I need to create a new function, I create the folder with the function name (copy-paste, rename), add the Helm template for that function (copy-paste), add its values to the values.yaml file (copy-paste, slightly modify), and commit.
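The copy-paste routine itself can be scripted. This is a minimal sketch that assumes each function's Helm template lives in the chart's templates folder as `<function name>.yaml` and that the function name appears verbatim in it. That layout is hypothetical. The `values.yaml` entry is still added by hand. Names containing characters that are special in `sed` regular expressions would need escaping.

```bash
#!/usr/bin/env bash
# Hypothetical scaffolding helper: copy an existing function folder and its
# Helm template under a new name.
# Usage: new_function EXISTING_NAME NEW_NAME FUNCTIONS_ROOT TEMPLATES_DIR
new_function() {
  local src=$1 dst=$2 root=$3 templates=$4
  # Refuse to overwrite: cp -R into an existing folder would nest the copy
  if [ -e "$root/$dst" ] || [ -e "$templates/$dst.yaml" ]; then
    echo "function '$dst' already exists" >&2
    return 1
  fi
  # Function code: copy-paste, rename
  cp -R "$root/$src" "$root/$dst" || return 1
  # Helm template: copy-paste, replacing every occurrence of the old name
  sed "s/$src/$dst/g" "$templates/$src.yaml" > "$templates/$dst.yaml" || return 1
  echo "Created $root/$dst and $templates/$dst.yaml; add '$dst:' to values.yaml"
}
```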

Did I satisfy my copy-paste needs? I would say yes, but there's more work to do for it to be "perfect". I still need to migrate other things to Kubernetes, but the start is there.

Doing something like this will have a cost impact in the short term, but once you've moved everything in, it evens out. There are other solutions, like Azure Container Apps, which I will talk about soon, and they have their place; however, they don't offer me the level of control I want.

At the time of writing, the cost is higher than the cost of the Premium Function plans, but there's a lot more room for additions without increasing cost.

I hope you learned something new and exciting. This was a stimulating challenge, and I hope this post will give you the right ideas and snippets on how to get to this point without much hassle.

As always, have a good one!