AKS Automatic - Kubernetes without headaches

This article examines AKS Automatic, a new, managed way to run Kubernetes on Azure. If you want to simplify cluster management and reduce manual work, read on to see if AKS Automatic fits your needs.

KEDA (Kubernetes-based Event-Driven Autoscaling) is an open-source project built by Microsoft in collaboration with Red Hat. It provides event-driven autoscaling for containers running on AKS (Azure Kubernetes Service), EKS (Elastic Kubernetes Service), GKE (Google Kubernetes Engine) or on-premises Kubernetes clusters.

KEDA allows fine-grained autoscaling (including to and from zero) for event-driven Kubernetes workloads. It serves as a Kubernetes metrics server and lets users define autoscaling rules through a dedicated Kubernetes custom resource definition. Traditionally, we scale systems (manually or automatically) when some metric crosses a threshold.
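To make the custom resource idea concrete, here is a minimal sketch of a ScaledObject for a Deployment named hello-keda that reads from the js-queue-items queue (names borrowed from the walkthrough below). It assumes KEDA 2.x (the `keda.sh/v1alpha1` API group) and a container that exposes the storage connection string in an environment variable called `AzureWebJobsStorage`; the helper name `scaledobject_manifest` and the replica and timing values are illustrative. For the walkthrough itself you don't need to write this by hand, because `func kubernetes deploy` generates a ScaledObject for you.

```bash
# Hypothetical helper: prints a KEDA 2.x ScaledObject manifest to stdout.
# Usage: scaledobject_manifest <deployment-name> <queue-name>
# Assumes the target container has the connection string in $AzureWebJobsStorage.
scaledobject_manifest() {
  local deployment="$1" queue="$2"
  cat <<EOF
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: ${deployment}
spec:
  scaleTargetRef:
    name: ${deployment}        # the Deployment KEDA will scale
  minReplicaCount: 0           # allow scaling to zero when the queue is empty
  maxReplicaCount: 30          # illustrative upper bound
  pollingInterval: 5           # seconds between queue-length checks
  cooldownPeriod: 30           # seconds of inactivity before scaling back to zero
  triggers:
    - type: azure-queue
      metadata:
        queueName: ${queue}
        queueLength: "5"       # target number of messages per replica
        connectionFromEnv: AzureWebJobsStorage
EOF
}
```

Piping the output to kubectl, for example `scaledobject_manifest hello-keda js-queue-items | kubectl apply -f -`, would create the object; KEDA then checks the queue every `pollingInterval` seconds and scales between the minimum and maximum replica counts.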

For example: if CPU usage stays above 60% for 5 minutes, scale our App Service out to a second instance. Often, by the time the trigger fires and the scale-out completes, the burst of traffic or events has already passed. KEDA, on the other hand, exposes rich event data, such as the length of an Azure Storage queue, to the Horizontal Pod Autoscaler (HPA) so that it can manage the scale-out for us. Once one or more pods have been deployed to meet the demand, events (or messages) are consumed directly from the source, which in our example is the Azure queue.
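The queue-length approach boils down to simple arithmetic. Roughly, with a target of N messages per replica, the HPA that KEDA drives asks for ceil(queueLength / N) replicas, clamped between the minimum and maximum replica counts. The bash sketch below reproduces only that arithmetic. It is a simplification (the real HPA also applies a tolerance and stabilization windows, and KEDA itself handles the step between zero and one replica), and the function name `desired_replicas` is hypothetical.

```bash
# Hypothetical helper: approximate replica count for a queue-driven workload.
# Usage: desired_replicas <queue_length> <target_per_replica> <min> <max>
# Simplified model: ignores HPA tolerance and stabilization windows.
desired_replicas() {
  local queue_length="$1" target="$2" min="$3" max="$4"
  # Integer ceiling division: ceil(queue_length / target)
  local replicas=$(( ($queue_length + $target - 1) / $target ))
  # Clamp to the configured bounds
  if [ "$replicas" -lt "$min" ]; then replicas="$min"; fi
  if [ "$replicas" -gt "$max" ]; then replicas="$max"; fi
  echo "$replicas"
}
```

For instance, `desired_replicas 12 5 0 30` prints 3, `desired_replicas 0 5 0 30` prints 0, and `desired_replicas 1000 5 0 30` prints 30 because the maximum caps the result.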

Prerequisites:

To follow this example without installing anything locally, you can use the Azure Cloud Shell; otherwise, install the Azure CLI, kubectl, Helm and the Azure Functions Core Tools.
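If you go the local route, a quick way to confirm the tools are on your PATH is a small check like the one below. The helper name `check_tools` is hypothetical; `func` is the executable installed by the Azure Functions Core Tools.

```bash
# Hypothetical helper: report which of the given command-line tools are missing.
# Returns 0 when every tool is found on PATH, 1 otherwise.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Running `check_tools az kubectl helm func && echo 'all set'` prints a `missing:` line for each tool it can't find and only says "all set" when nothing is missing.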

I ran everything in the cloud and used Lens (the Kubernetes IDE) on my workstation for monitoring and for running other commands.

Now open your Azure Cloud Shell, roll up your sleeves and let’s get started:

Note that the code contains placeholders in <> brackets. Don’t copy-paste blindly; replace them with the values for your deployment.

```bash
# Log in to your AKS cluster
az aks get-credentials -n <aksClusterName> -g <resourceGroupofSaidCluster>

# Install KEDA using Helm 3 (release name first, then the chart)
helm repo add kedacore https://kedacore.github.io/charts
helm repo update
helm install keda kedacore/keda --namespace keda --create-namespace

# Validate that KEDA is up and running
kubectl get pods -n keda
# --> you should see the keda-operator pod up and running
```
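Beyond listing pods, you can wait for the KEDA deployments to become available and confirm that the ScaledObject CRD is registered. The sketch assumes KEDA 2.x, whose CRD is named `scaledobjects.keda.sh`, and takes the namespace you installed KEDA into (defaulting to `keda`); the function name `verify_keda` and the 180-second timeout are illustrative.

```bash
# Hypothetical helper: check that a KEDA 2.x install is ready.
# Usage: verify_keda [namespace]   (defaults to "keda")
verify_keda() {
  local ns="${1:-keda}"
  # Block until every Deployment in the namespace reports Available (or time out)
  kubectl wait --for=condition=Available deployment --all -n "$ns" --timeout=180s &&
  # The ScaledObject CRD should now be registered with the API server
  kubectl get crd scaledobjects.keda.sh
}
```

Calling `verify_keda keda` returns a non-zero status if the deployments don't become available in time or the CRD is missing, which makes it easy to use in a script.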

The next step after you’re done installing KEDA on your AKS cluster is to instantiate your function:

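```bash
# Create a folder where the function will live
mkdir kedafunction
cd kedafunction

# Initialize a Functions project with a Dockerfile (choose node, then javascript, when prompted)
func init . --docker

# Create the function: select "Azure Queue Storage trigger" by typing the number associated with it
func new

# Create a storage account to use its queue feature
az storage account create --sku Standard_LRS --location westeurope -g <RGofChoice> -n <storageAccountName>

# Store the account's connection string in a variable (-o tsv strips the JSON quotes)
CONNECTION_STRING=$(az storage account show-connection-string -g <RGofChoice> --name <storageAccountName> --query connectionString -o tsv)

# Create the storage queue the function will poll for messages
az storage queue create -n js-queue-items --connection-string "$CONNECTION_STRING"

# Point the function at the storage account: set AzureWebJobsStorage in local.settings.json
# to the value of $CONNECTION_STRING, and check that the trigger's function.json uses
# queueName "js-queue-items" and connection "AzureWebJobsStorage"

# Create the YAML file with the Kubernetes deployment
func kubernetes deploy --name hello-keda --registry <yourContainerRegistry>.azurecr.io --javascript --dry-run > deploy.yaml

# Run an ACR task to build the Dockerfile created by func init --docker
az acr build -t hello-keda:v1 -r <youracrname> .
# Make sure the image: line in deploy.yaml points at <youracrname>.azurecr.io/hello-keda:v1

# Allow the AKS cluster to pull images from the registry
az aks update -n <aksClusterName> -g <resourceGroupofSaidCluster> --attach-acr <youracrname>

# Start the function
kubectl apply -f deploy.yaml
```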
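Before applying the manifest, it can be worth checking what `func kubernetes deploy` generated (typically a Secret with the settings from local.settings.json, a Deployment and a ScaledObject) and which image the Deployment will pull. The hypothetical `manifest_summary` helper below only greps the top-level `kind:` lines and the container `image:` lines, so it is a quick sanity check, not a YAML parser.

```bash
# Hypothetical helper: summarise a multi-document manifest such as deploy.yaml.
# Prints each top-level "kind:" line and every container "image:" line.
manifest_summary() {
  local file="$1"
  # Top-level resource kinds (Secret, Deployment, ScaledObject, ...)
  grep -E '^kind:' "$file"
  # Container images, whether written as "image: x" or "- image: x"
  grep -E '^[[:space:]]*(- )?image:' "$file" | sed -E 's/^[[:space:]]*(- )?//'
}
```

Run it as `manifest_summary deploy.yaml`; if the image line doesn't match the tag you built with `az acr build`, edit deploy.yaml before running `kubectl apply`.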

Enjoy the fireworks :)

After everything is up and running, I run the PowerShell script below to flood the queue with messages.

```powershell
$storageAccount = '<InsertStorageAccountNameHere>'
$accesskey = '<InsertStorageAccountKeyHere>'

# Sends one message to an Azure Storage queue through the REST API,
# signing the request with the account key (SharedKeyLite scheme)
function Add-MessageToQueue($QueueMessage, $QueueName)
{
    $method = 'POST'
    $contenttype = 'application/x-www-form-urlencoded'
    $version = '2017-04-17'
    $resource = "$QueueName/messages"
    $queue_url = "https://$storageAccount.queue.core.windows.net/$resource"

    # String to sign: verb, Content-MD5 (empty), Content-Type, Date (empty, x-ms-date is used instead),
    # then the canonicalized x-ms-* headers and the canonicalized resource
    $GMTTime = (Get-Date).ToUniversalTime().ToString('R')
    $canonheaders = "x-ms-date:$GMTTime`nx-ms-version:$version`n"
    $stringToSign = "$method`n`n$contenttype`n`n$canonheaders/$storageAccount/$resource"

    # HMAC-SHA256 signature keyed with the decoded storage account key
    $hmacsha = New-Object -TypeName System.Security.Cryptography.HMACSHA256
    $hmacsha.Key = [Convert]::FromBase64String($accesskey)
    $signature = [Convert]::ToBase64String($hmacsha.ComputeHash([Text.Encoding]::UTF8.GetBytes($stringToSign)))

    $headers = @{
        'x-ms-date'    = $GMTTime
        Authorization  = 'SharedKeyLite ' + $storageAccount + ':' + $signature
        'x-ms-version' = $version
        Accept         = 'text/xml'
    }

    # The Functions queue trigger expects base64-encoded message text by default
    $encoded = [Convert]::ToBase64String([Text.Encoding]::UTF8.GetBytes($QueueMessage))
    $body = "<QueueMessage><MessageText>$encoded</MessageText></QueueMessage>"

    Invoke-RestMethod -Method $method -Uri $queue_url -Headers $headers -Body $body -ContentType $contenttype | Out-Null
}

# Send 1,000 messages: hellomeetup1 .. hellomeetup1000
1..1000 | ForEach-Object -Process {
    Add-MessageToQueue -QueueMessage "hellomeetup$_" -QueueName 'js-queue-items'
}
```
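If you’d rather stay in the Cloud Shell’s bash session, a roughly equivalent loop can use `az storage message put`. Like the PowerShell script, it base64-encodes each message, since the Functions queue trigger expects base64 text by default. The helper name `flood_queue` is hypothetical, and it assumes `CONNECTION_STRING` still holds the connection string from the earlier step.

```bash
# Hypothetical helper: enqueue <count> messages "hellomeetup1".."hellomeetup<count>".
# Assumes CONNECTION_STRING holds the storage account connection string (set earlier).
flood_queue() {
  local queue="$1" count="$2" i=1 payload
  while [ "$i" -le "$count" ]; do
    # Base64-encode the text (printf adds no trailing newline), as the PowerShell script does
    payload="$(printf 'hellomeetup%s' "$i" | base64)"
    az storage message put \
      --queue-name "$queue" \
      --content "$payload" \
      --connection-string "$CONNECTION_STRING" \
      --output none
    i=$((i + 1))
  done
}
```

Calling `flood_queue js-queue-items 1000` sends the same 1,000 messages. Expect it to be noticeably slower than the PowerShell version, since every message starts a new `az` process.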

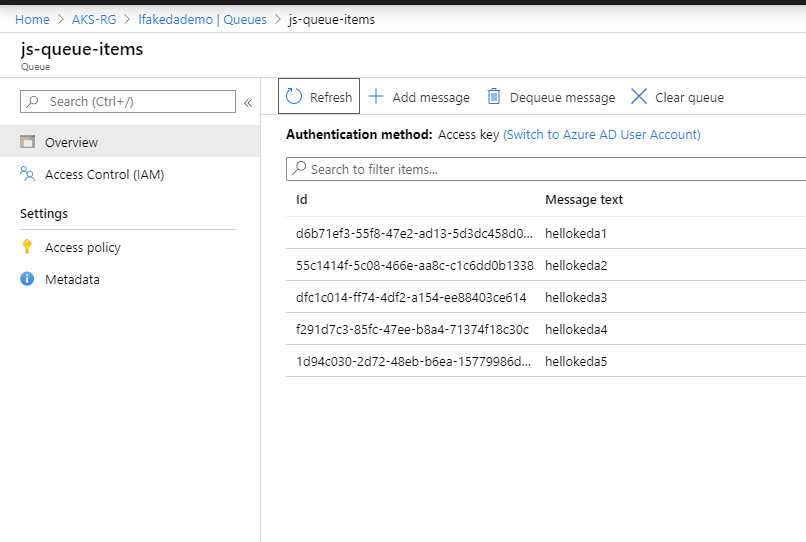

Once the PowerShell script starts running, the first messages appear in the queue.

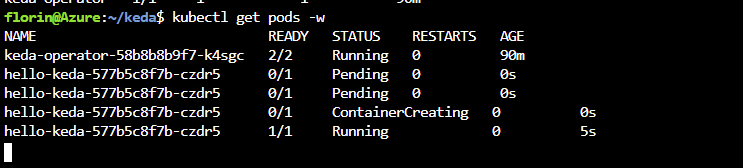

With kubectl in watch mode, I start observing the fireworks.
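To follow the scaling from the command line (in addition to, or instead of, Lens), you can look at the ScaledObject and watch the HPA that KEDA manages for it. The sketch assumes KEDA 2.x, which names that HPA `keda-hpa-<scaledobject-name>`, and assumes the ScaledObject generated for this example is called `hello-keda`; the helper name `watch_scaling` is hypothetical. Running `kubectl get pods -w` in a second terminal shows the pods themselves coming and going.

```bash
# Hypothetical helper: show a ScaledObject, then stream updates from its KEDA-managed HPA.
# Usage: watch_scaling [scaledobject-name]   (defaults to "hello-keda")
watch_scaling() {
  local name="${1:-hello-keda}"
  # Current state of the ScaledObject, including its READY and ACTIVE columns
  kubectl get scaledobject "$name"
  # Assumes KEDA 2.x naming for the HPA it creates: keda-hpa-<scaledobject-name>
  kubectl get hpa "keda-hpa-$name" --watch
}
```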

This solution essentially exists to bring Azure Functions everywhere. You can now run Azure Functions wherever you want without paying for the Azure Functions service itself (you still pay for the cluster it runs on). You are not obligated to run functions in Azure; you can run them on Google Cloud or AWS if you want. Want on-premises? Go crazy, it works as long as you’re running Kubernetes.

The main idea is to bring Functions as a Service (FaaS) to any system, and that’s very cool. While preparing this post, I took a running system that uses Bot Framework, Service Bus and Azure Functions and ported the Azure Functions part to KEDA. Zero downtime, no difference, it just works. The chatbot adds messages to Service Bus, and the function triggers on them. I ran both versions in parallel for five minutes, then took down the Azure-hosted one.

The main point of using KEDA is to be cloud-agnostic. You know Kubernetes, you know Azure Functions, you don’t need to change anything. Hell, if you’re in one of those regulated environments where the cloud is a big no-no, you can just as well integrate KEDA into your clusters, then set up triggers with whatever messaging system you have, or even just set up plain HTTP triggers and be done with it. Once the regulatory part relaxes and the company starts to dip its toes in the water, you can just as well copy-paste the code into Azure.

That being said, have a good one!