Managed Prometheus and Grafana - No hassle cluster monitoring

A couple of years ago, I had contact with Prometheus and Grafana; it was 2017 when I deployed my first production ACS (Azure Container Service

Since I started working with Kubernetes a while ago, I had a problem. A problem regarding managing the cluster: the Azure Kubernetes Service offering looks like a managed offering; however, it's more like a semi-managed system where the API Server is managed by Microsoft and the data, nodes and configurations are managed by you. Alright, that sounds good; I have the power to do whatever I want mostly. However, I wanted more. I want to avoid having to manage the infrastructure story of Kubernetes and focus my attention and limited time on the deployments that I do.

AKS Automatic is Microsoft's new "hands-off" -ish mode for running Kubernetes in Azure. Think of it as AKS on autopilot (not the Tesla one) - a fully managed, good practices-based configuration where Azure takes care of the toil (node scaling, upgrades, security hardening, and more) so you can focus on your apps.

In this article, we'll dive deep into AKS Automatic, why it matters, and how it works in practice. I'll walk through setting it up and share some real experiences (the good, the bad, and the ugly). We'll compare AKS Automatic with traditional DIY cluster management tools like Cluster Autoscaler, Terraform, KEDA, NGINX, etc.

AKS Automatic is a new mode of Azure Kubernetes Service that delivers a production-ready Kubernetes cluster out-of-the-box with minimal manual setup. It's not a separate product from AKS, but rather a preconfigured cluster where many of the cluster management decisions are made up front instead of after the deployment step. Microsoft has built the mode with many best practices in mind and has automated the entire process, from the infra deployment to monitoring. You shouldn't have to spend days tweaking Kubernetes settings or setting up addons – the cluster comes preconfigured following AKS well-architected guidelines and "just works" for most common use cases.

Azure automatically takes care of node provisioning and scaling, Kubernetes upgrades, networking setup, and security hardening. For example, with a regular AKS cluster, you'd create and manage one or more node pools (specifying VM sizes, scaling settings, etc.). With AKS Automatic, you don't manually manage node pools – the platform will dynamically create and scale nodes based on your workloads' needs using a Node Autoprovisioning feature.

This means if your app suddenly needs more compute, Azure will spin up the right VMs on the fly; if the load drops, it can scale back down (even to zero for unused workloads) to save costs.

Right out of the box, they have enabled a set of settings you'd otherwise have to configure: for instance, node auto-repair is on, so if a node becomes unhealthy, it's auto-recycled. Automatic upgrades for the control plane and node pools are enabled, so your cluster will stay up to date with Kubernetes patches without you clicking any upgrade buttons.

In fact, upgrade orchestration is handled for you with rolling updates to minimize downtime and an option to define a maintenance window for control over when upgrades occur.
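As a rough sketch of what such a maintenance window looks like from the CLI: the helper below assumes the `az aks maintenanceconfiguration` command group and the `aksManagedAutoUpgradeSchedule` configuration name work on an Automatic cluster the same way they do on a standard one. The function name, the start time, and the four-hour duration are illustrative choices, not values taken from the cluster in this article.

```shell
# Sketch: define a weekly Sunday window for automatic cluster upgrades.
# The function name is hypothetical. $1 = resource group, $2 = cluster name.
set_weekly_upgrade_window() {
  az aks maintenanceconfiguration add \
    --resource-group "$1" \
    --cluster-name "$2" \
    --name aksManagedAutoUpgradeSchedule \
    --schedule-type Weekly \
    --day-of-week Sunday \
    --interval-weeks 1 \
    --start-time 00:00 \
    --duration 4
}
```

Calling `set_weekly_upgrade_window myResourceGroup myAKSAutomaticCluster` would then request upgrades only inside that window.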

Clusters come with Azure AD (Entra ID) integration for authentication, and RBAC is enabled out of the box. Features like Workload Identity (so pods can seamlessly auth with Azure AD without secrets) and OpenID Connect issuer are pre-enabled, and the best part is that there is no local admin on the cluster, which can be a good or bad thing, depending on how you view it. If you don't know what I'm referring to, it's the --admin flag when you get the AKS credentials using az cli. Those credentials are pretty much permanent, and you need to do a certificate rotation to boot people from the cluster with those credentials.
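To make the local admin point concrete, here is a minimal sketch of the commands involved on a standard AKS cluster. The function names are made up for illustration; the `az` subcommands and flags are the regular Azure CLI ones, and the behavior on an Automatic cluster is assumed rather than shown.

```shell
# $1 = resource group, $2 = cluster name (both placeholders)

# Fetch the certificate-based local admin kubeconfig.
# Expected to be rejected on clusters where local accounts are disabled.
get_local_admin_kubeconfig() {
  az aks get-credentials --resource-group "$1" --name "$2" --admin
}

# On a standard cluster: disable local accounts, then rotate certificates
# so admin kubeconfigs that were already handed out stop working.
lock_out_local_admins() {
  az aks update --resource-group "$1" --name "$2" --disable-local-accounts &&
    az aks rotate-certs --resource-group "$1" --name "$2" --yes
}
```

Certificate rotation is disruptive, so on a standard cluster it is something to schedule rather than run casually. With no local admin in the first place, that whole step goes away.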

The API server is integrated with your virtual network for private node communication; even little things like an image cleaner are running to wipe out unused container images that take up space and might have known vulnerabilities.

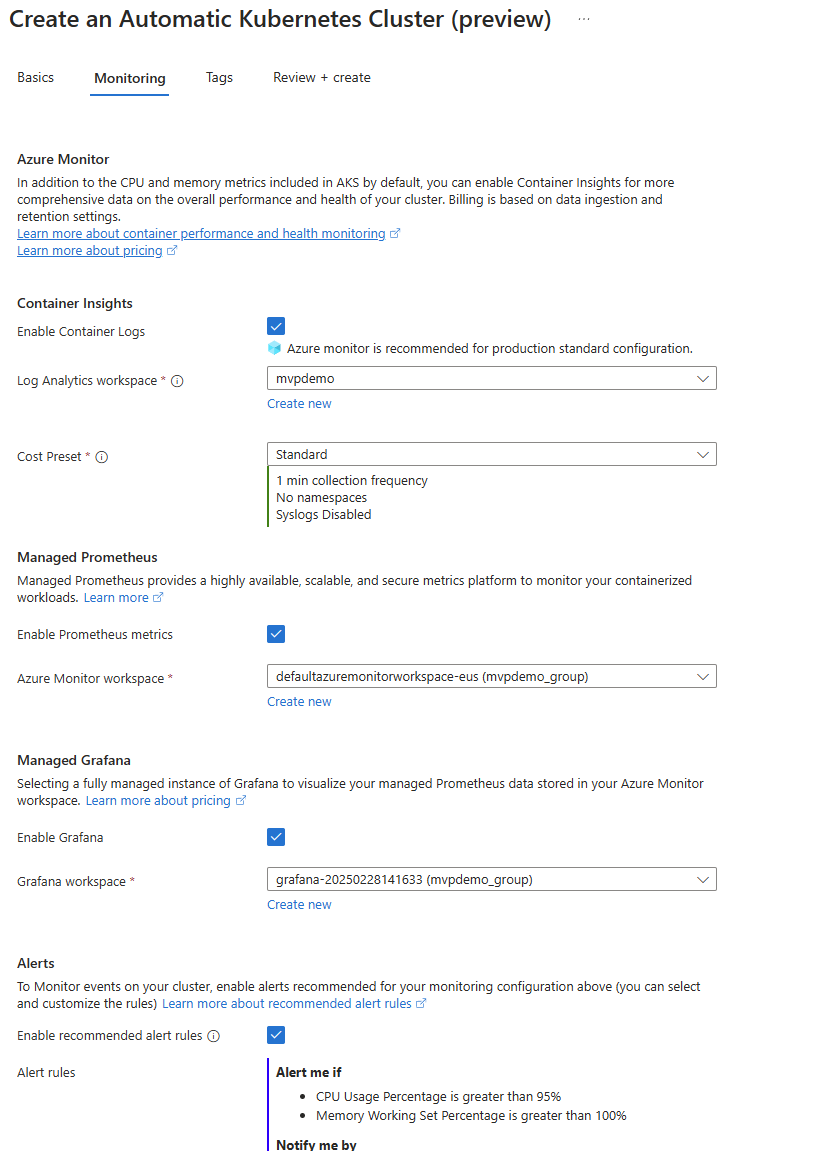

Monitoring and logging are set up by default: when you create an AKS Automatic cluster, it will enable Container Insights for logs and metrics, and even deploy Managed Prometheus and Grafana for you, which means you get the entire observability stack and best-practice alerts wired in. Similarly, it comes with an out-of-the-box managed NGINX ingress controller (using the AKS Application Routing addon) integrated with Azure DNS and TLS certificates via Key Vault.
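For the ingress part, a workload opts into the managed controller through its ingress class. The sketch below writes a minimal Ingress manifest, assuming the application routing add-on exposes the `webapprouting.kubernetes.azure.com` class; the function name, the `store-front` service, and port 80 are placeholders for whatever you actually run.

```shell
# Sketch: write an Ingress that targets the managed NGINX controller.
# $1 = output file. Service name and port are placeholders.
write_ingress_manifest() {
  cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: store-front
spec:
  ingressClassName: webapprouting.kubernetes.azure.com
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: store-front
                port:
                  number: 80
EOF
}
```

Something like `write_ingress_manifest ingress.yaml && kubectl apply -f ingress.yaml` would then hand the routing over to the pre-installed controller.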

It automatically attaches a managed NAT Gateway for outbound traffic to ensure scalable egress and avoid SNAT port exhaustion issues.

All these things you'd normally piece together are enabled by default. While you can do most of them with Terraform and post-configuration scripts, you still have to allocate the time to create those automations and test them.

It's worth noting that AKS Automatic is currently in preview (as of this writing), which means it's new and still evolving. You have to register a feature flag to use it, and it's not yet recommended for production use until it becomes generally available. But it's already a fully functional experience.

So, why should we care about AKS Automatic? What problems is it trying to solve? In one word: complexity. Running Kubernetes (even a managed service like AKS) involves many decisions and maintenance chores. If you've managed AKS clusters the traditional way, you know the drill: choose the right VM SKU for nodes, configure cluster autoscaler thresholds, set up Azure Monitor for logs, secure the kube API endpoint, keep up with patching, apply Azure Policies / Kyverno Policies for guardrails, etc. It's a lot, and it requires both time and expertise.

Here are a few specific problems AKS Automatic addresses and why they're essential:

Alright, enough book reading, let's try out this AKS Automatic. In this section, I'll walk through setting up an AKS Automatic cluster and highlight how it behaves with an actual workload. I will go ahead and deploy a simple demo app provided by the Azure team to see what it can do. I want to see how the cluster handles provisioning, scaling, and other tasks automatically. If it fails, I will have a very strong opinion about it.

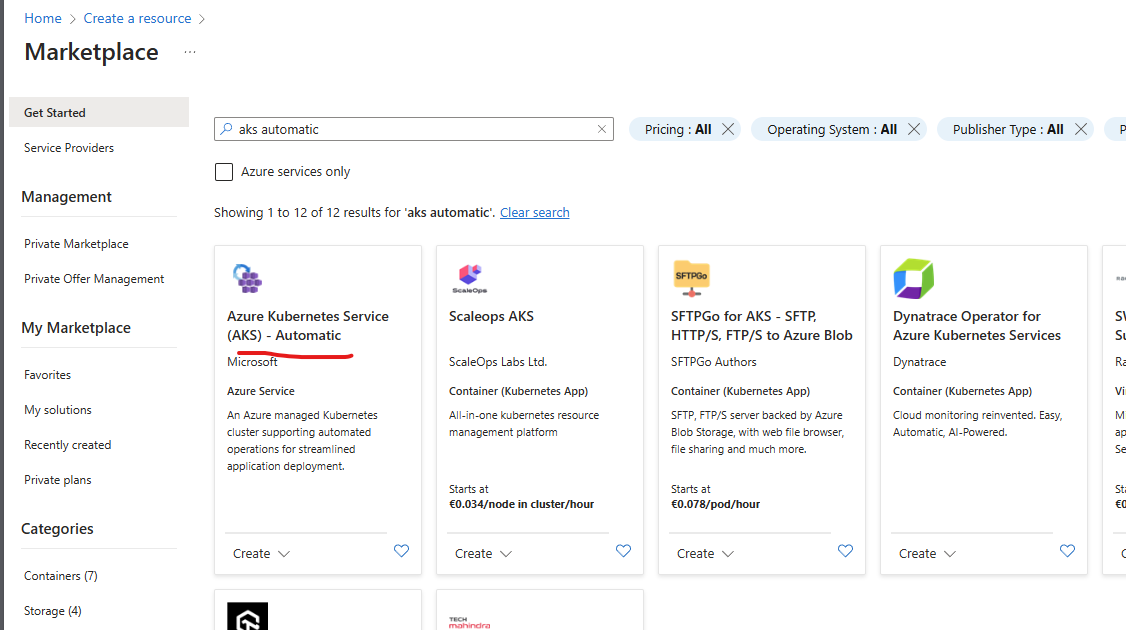

You can create an AKS Automatic cluster through the Azure Portal or the Azure CLI (with the aks-preview extension). In the Azure Portal, when you go to create a Kubernetes cluster, you'll now see a new option in the drop-down: "Automatic Kubernetes cluster (preview)." This is alongside the classic "Kubernetes cluster" option. Choosing the Automatic variant will present a simplified set of options – because many settings are fixed or preconfigured behind the scenes.

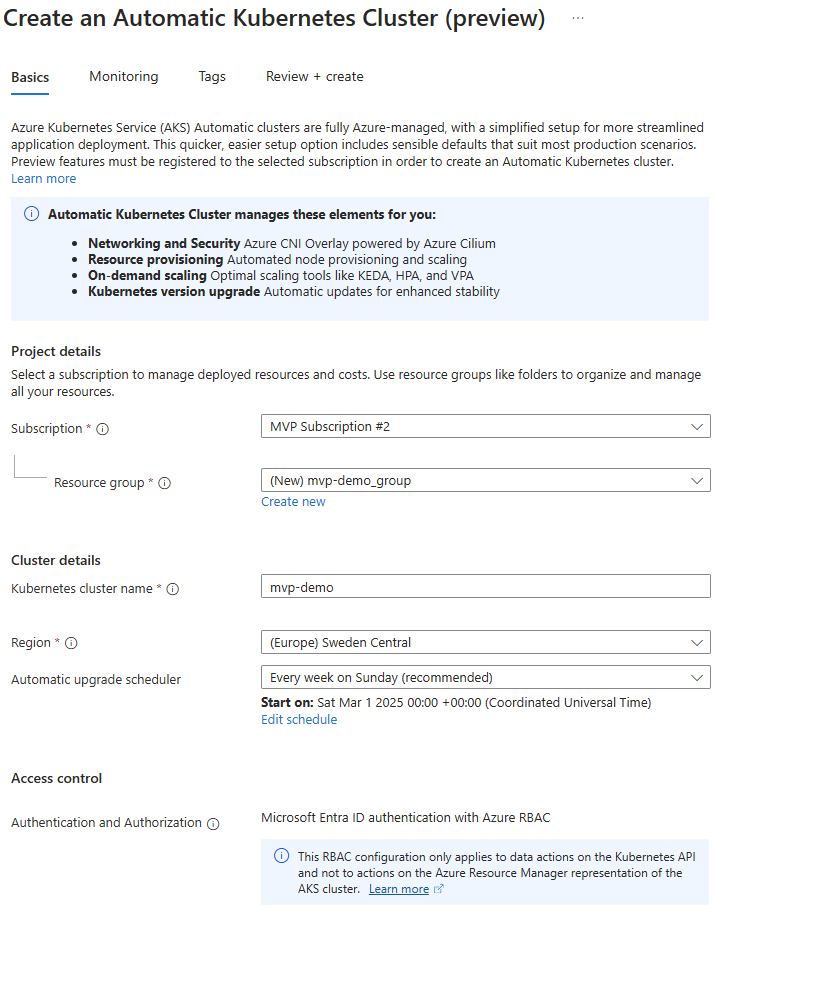

When creating through the Portal's wizard, you'll notice that on the Basics tab, there's a blurb highlighting what the Automatic cluster will manage for you (Networking with Azure CNI Overlay/Cilium, automated node provisioning, on-demand scaling with HPA/KEDA/VPA, and automatic k8s version upgrades). You mainly just pick a region, give the cluster a name, and choose your subscription/resource group. There's also an "Automatic upgrade scheduler" field where you can accept or adjust the recommended default maintenance window.

You will notice an error saying that the subscription you're deploying to doesn't have the required feature flags to deploy the cluster, so you can either click the blue Register Preview Features link or run the following commands in the Azure CLI:

az extension add --name aks-preview

az extension update --name aks-preview

# Register the feature flag

az feature register --namespace "Microsoft.ContainerService" --name "NodeAutoProvisioningPreview"

# Verify registration status

az feature show --namespace "Microsoft.ContainerService" --name "NodeAutoProvisioningPreview"

# Refresh the registration of the resource provider when the above command shows registered

az provider register --namespace Microsoft.ContainerService

In my case, the upgrade schedule was set to "Every week on Sunday (recommended)" by default, meaning the cluster will auto-upgrade on a weekly schedule (you can edit the day/time). This is a nice touch: your cluster has a maintenance window for safe upgrades from the get-go rather than upgrading at random times.

If you prefer the CLI over the Portal, creating the cluster is a single command:

az aks create \
--resource-group myResourceGroup \
--name myAKSAutomaticCluster \
--sku automatic

After a few minutes, the cluster is up and running. You can grab credentials and see the nodes:

az aks get-credentials -g myResourceGroup -n myAKSAutomaticCluster

kubectl get nodes

That's it! No need to specify node count or VM size – Azure will handle the nodes. The CLI will enable the appropriate monitoring addons by default as well (Managed Prometheus, Grafana, etc., are auto-configured when using --sku automatic via CLI).

On a fresh cluster, you might see one or a small number of nodes ready (if Azure pre-provisioned some baseline). The node names will look like any AKS node pool (e.g., aks-nodepool1-...) but note that you didn't manually create "nodepool1" – it was created for you. All nodes run Azure Linux as the OS by default.

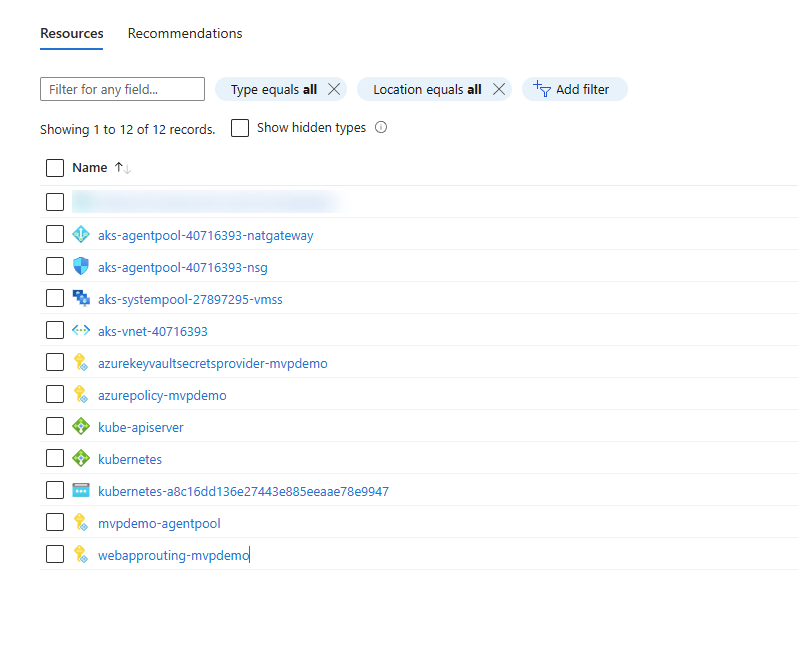

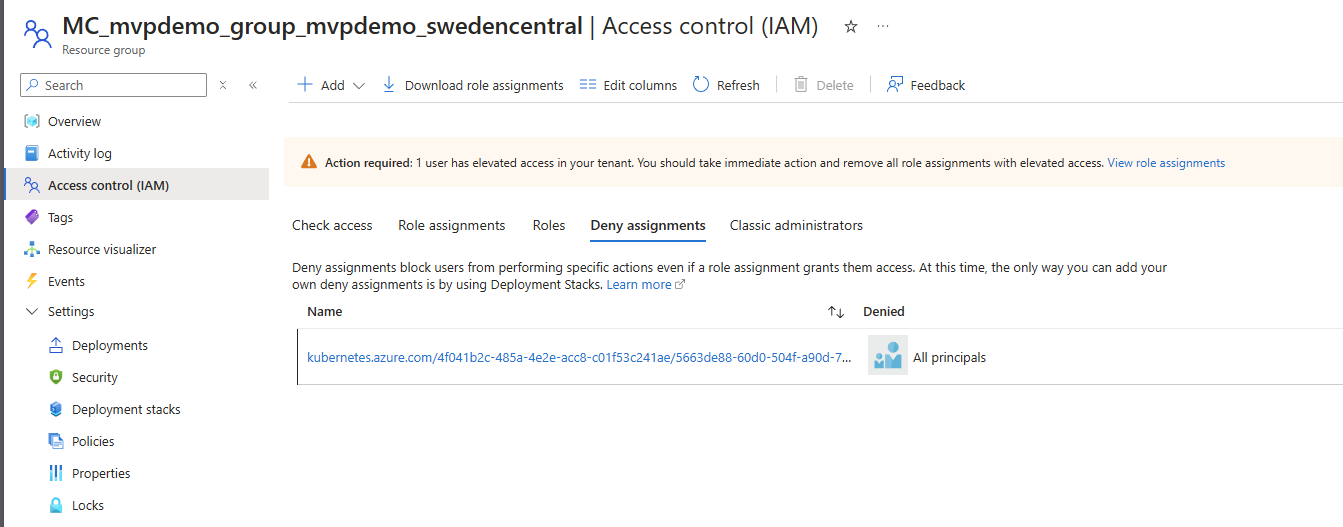

Also, if you check the resource group in Azure, you'll find the node VMs in a locked-down node resource group (with naming like MC_myResourceGroup_myAKSAutomaticCluster_region as usual). Azure has marked that resource group read-only to prevent you from accidentally altering the managed infrastructure.
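If you want to look at that managed infrastructure without clicking through the Portal, a small sketch like the following resolves the node resource group name and lists what lives in it. The function name is hypothetical; the `az aks show` and `az resource list` calls are standard Azure CLI, and listing is a read-only operation, so the lockdown should not get in the way.

```shell
# Sketch: list the resources Azure manages on your behalf.
# $1 = resource group, $2 = cluster name
list_managed_infrastructure() {
  # Resolve the MC_... node resource group from the cluster itself
  nrg="$(az aks show --resource-group "$1" --name "$2" \
    --query nodeResourceGroup --output tsv)" || return 1
  az resource list --resource-group "$nrg" --output table
}
```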

You won't have SSH access to these nodes either – and that's by design for security purposes.

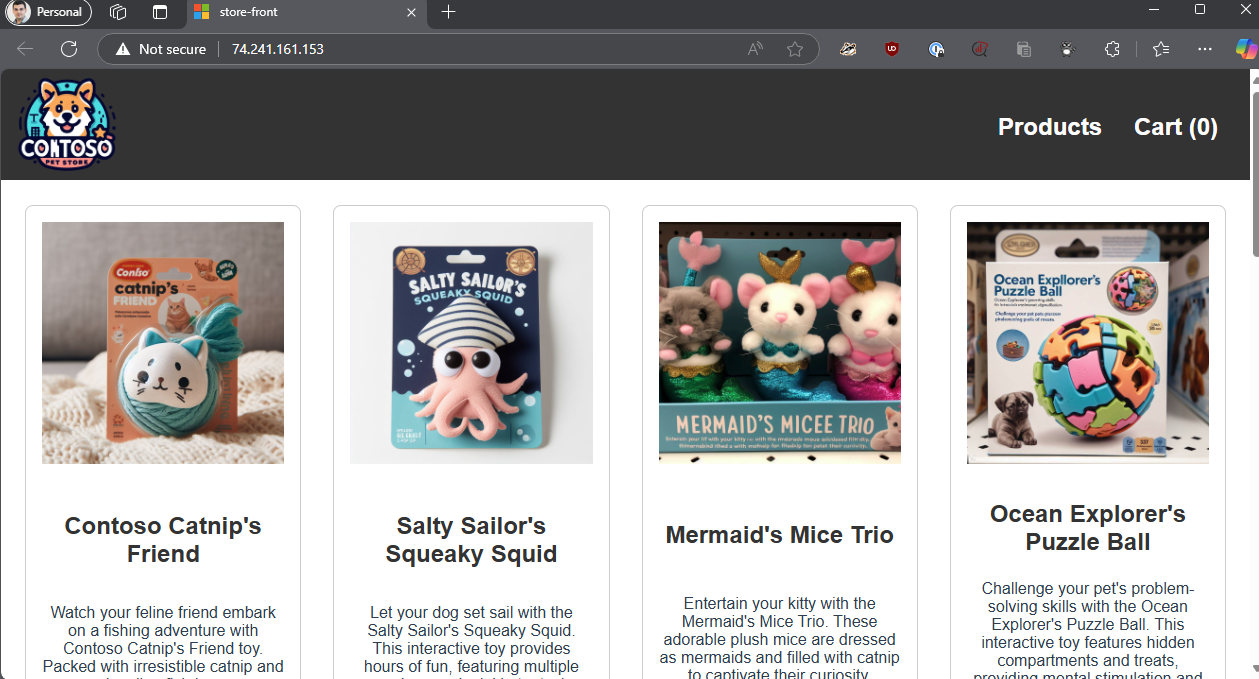

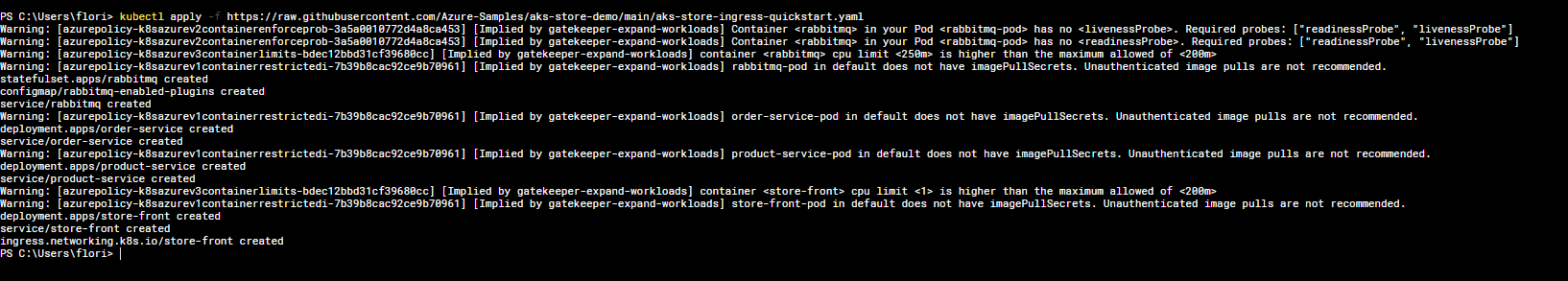

Now for the fun part – deploying an application and seeing AKS Automatic do its thing. I deployed a sample microservices app provided by Azure (the "AKS Store Demo" which has a few services and an ingress) with a single kubectl apply command:

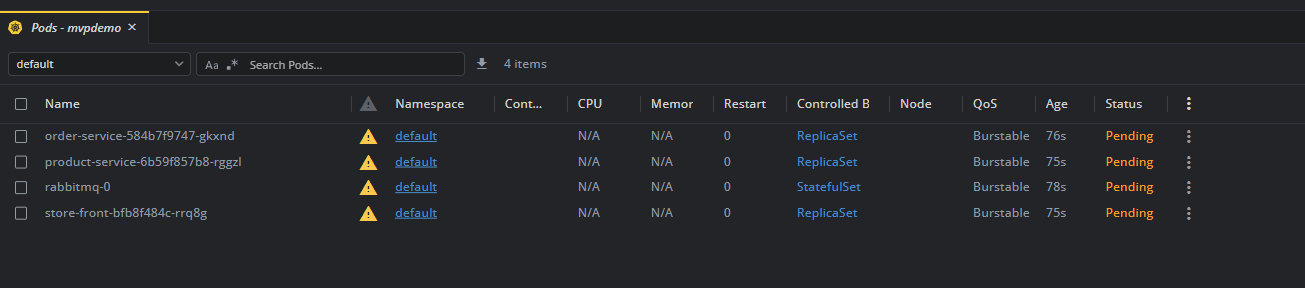

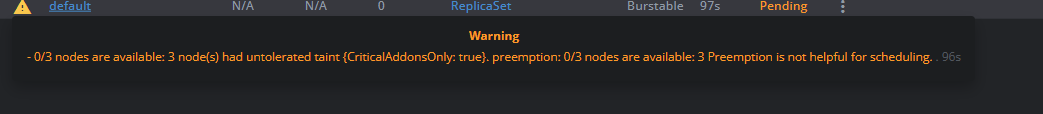

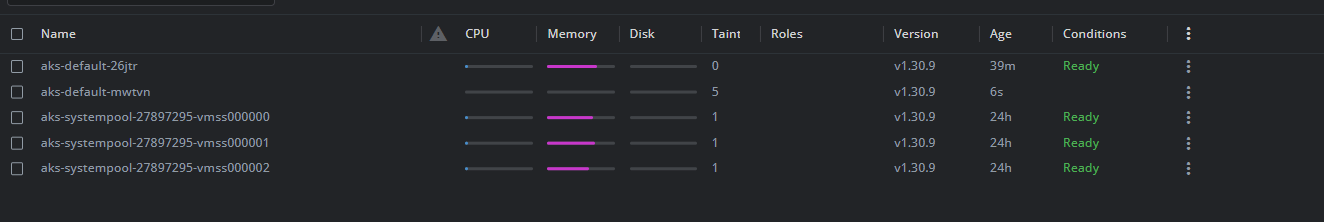

kubectl apply -f https://raw.githubusercontent.com/Azure-Samples/aks-store-demo/main/aks-store-ingress-quickstart.yaml

This manifest defined several deployments (with varying replicas) and an Ingress resource. Once applied, Kubernetes scheduled the pods. On a standard AKS, if there weren't enough nodes to place all these pods, the Cluster Autoscaler would need to add nodes – assuming it's configured. In our AKS Automatic cluster, Node Autoprovisioning kicks in immediately. The cluster's control plane notices pending pods that can't be scheduled, and instead of saying "unschedulable," it invokes the autoprovisioner to create new nodes of the appropriate size. Within a couple of minutes, I saw new nodes join the cluster to accommodate the workloads. The beauty is that I did zero manual config to enable this – no fiddling with autoscaler settings. AKS Automatic decided the VM SKU for me based on the pods' resource requests. (It even bin-packed pods efficiently, so it didn't over-provision too many nodes.)
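To follow that sequence yourself, something along these lines shows the pending pods, the provisioner's activity, and the VM size picked for each node. This is a sketch: the function name is made up, and it assumes the provisioner reports its events with the source `karpenter`.

```shell
# Sketch: observe node auto-provisioning reacting to unschedulable pods.
watch_autoprovisioning() {
  # Pods the scheduler has not been able to place yet
  kubectl get pods --all-namespaces --field-selector=status.phase=Pending
  # Events emitted by the provisioner (source name is an assumption)
  kubectl get events --all-namespaces --field-selector source=karpenter
  # Nodes, with the VM size shown as an extra column
  kubectl get nodes -L node.kubernetes.io/instance-type
}
```

Running it a few times after the `kubectl apply` should show the pending list shrinking while new nodes appear.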

The cluster not only scaled out, but it also automatically set up the Ingress for the app. Because a managed NGINX ingress controller was already present, my Ingress resource was picked up and assigned an IP, through which I could then access the demo app.

Next, I decided to stress test the scaling. I increased the replicas of one of the microservices from 1 to 20 via kubectl scale. This put significant load on the cluster. Almost immediately, as those new pods were scheduled, Node Autoprovisioner again added capacity. Within a few minutes, my cluster grew from 1 node to 2 nodes to handle the load, and later it figured out that only one node was needed. I didn't need to touch any scaling settings. I didn't even need an HPA; it just started adding nodes.
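The scale test can be reproduced with a few kubectl calls. In this sketch the function name is hypothetical and `store-front` is only an assumed deployment name from the demo app; substitute whichever deployment you want to inflate.

```shell
# Sketch: scale one deployment up and watch capacity follow.
# $1 = deployment name (e.g. store-front, an assumption), $2 = replica count
stress_scale() {
  kubectl scale deployment "$1" --replicas="$2" &&
    # Block until every replica is running, or give up after 10 minutes
    kubectl rollout status deployment "$1" --timeout=10m &&
    # Show the nodes and VM sizes that ended up backing the workload
    kubectl get nodes -L node.kubernetes.io/instance-type
}
```

`stress_scale store-front 20` scales out; `stress_scale store-front 1` brings it back so you can watch the extra nodes drain away.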

When I later scaled the deployments back down to normal, the excess nodes were removed (after a cooldown period). In fact, AKS Automatic's use of Karpenter means it can scale down to zero nodes for a given workload if it's completely idle.

Also, the cluster has Azure Policy Addon enabled in audit mode. When I attempted a bad practice, I got a warning event that the Deployment Safeguards policy was violated. It didn't stop me (since it defaults to audit mode), but it let me know that I might want to enforce it in a real production scenario. This is part of those "safeguards" to keep workloads aligned with best practices.

From a security standpoint, by default, my user had Azure RBAC access as a cluster admin (because I created the cluster). There was no Kubernetes basic auth or client cert – it was all integrated with Entra ID (I still have the reflex of saying Azure AD) tokens. This meant that if I wanted to grant somebody access, I'd assign them an Azure RBAC role (like AKS Cluster Admin or just a specific namespace access role) and they would authenticate to the cluster using their user account.
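Granting namespace-scoped access that way boils down to a role assignment whose scope is the cluster's resource ID plus a namespace suffix. A minimal sketch, assuming the built-in "Azure Kubernetes Service RBAC Reader" role is the one you want; the function name and all four arguments are placeholders.

```shell
# Sketch: give one user read-only access to a single namespace.
# $1 = resource group, $2 = cluster name, $3 = user (UPN or object ID), $4 = namespace
grant_namespace_reader() {
  # The cluster's full Azure resource ID is the base of the scope
  aks_id="$(az aks show --resource-group "$1" --name "$2" \
    --query id --output tsv)" || return 1
  az role assignment create \
    --assignee "$3" \
    --role "Azure Kubernetes Service RBAC Reader" \
    --scope "${aks_id}/namespaces/$4"
}
```

Dropping the `/namespaces/...` suffix widens the assignment to the whole cluster, which is where a role like the RBAC Cluster Admin one would normally be scoped.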

To sum up the hands-on: AKS Automatic works. 'Nuff said. This is coming from a person who works with Kubernetes, debugs Kubernetes, and has days when he hates Kubernetes 😄

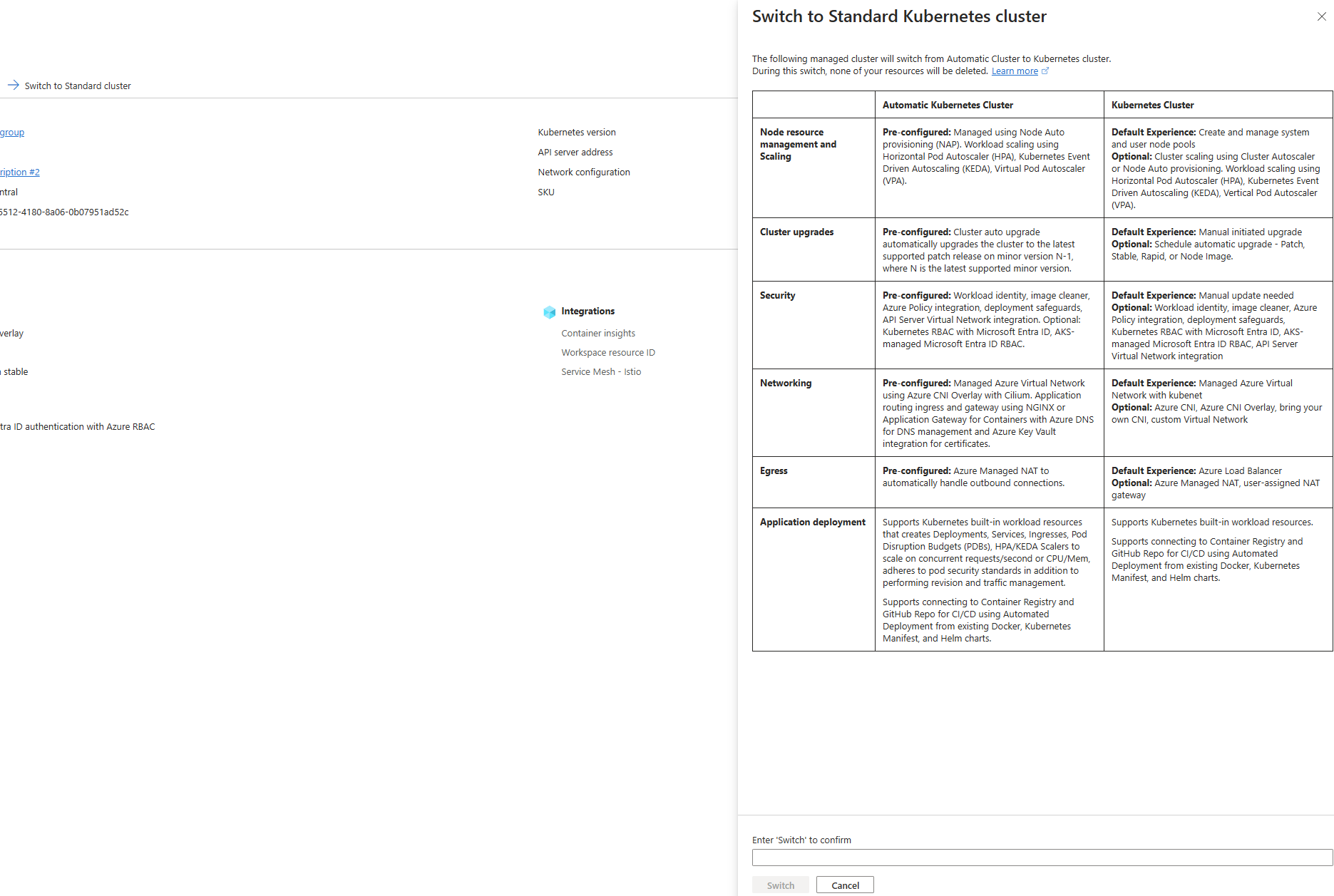

How does AKS Automatic stack up against the traditional ways of automating and managing AKS clusters? Many of us have built tooling and processes around vanilla AKS – using Cluster Autoscaler, Terraform, GitOps with Flux/Argo CD, and Helm charts for addons. It's worth comparing these approaches to understand what AKS Automatic changes. I'll break down the comparison by different aspects of cluster management and mention the pros/cons of each approach:

| Aspect | AKS Normal (Standard) | AKS Automatic |
|---|---|---|
| Node Scaling & Provisioning | • You manually configure Cluster Autoscaler or enable node auto-provisioning. • You manage node pools (sizes, counts, VM SKUs) manually. | • Fully managed node scaling with built-in Node Autoprovisioning (Karpenter). • Automatic bin-packing and scale-to-zero capabilities without manual node pool management. |
| Infrastructure Management | • Provisioned via Terraform, ARM, or Bicep with many customizable options. • Full control over networking, VM types, and additional settings. | • Simplified and opinionated provisioning with fewer options to tweak. • Azure provides default, best-practice configurations for networking, OS, and more. |
| Application Deployment | • Requires setting up your own CI/CD pipelines, GitOps, and Helm charts for add-ons (like ingress and monitoring). | • Preconfigured with managed add-ons (NGINX ingress, Managed Prometheus/Grafana) and integrated CI/CD deployment options. |
| Upgrades & Maintenance | • Upgrades and maintenance need to be planned and executed manually or via self-configured automation. | • Automatic, scheduled upgrades for both control plane and nodes with maintenance window configuration and built-in safety checks to avoid downtime. |
| Security & Governance | • Security settings (Azure AD integration, RBAC, network policies) are configurable but must be manually set up. | • Security best practices are enforced by default (e.g., mandatory Azure AD, RBAC, policy enforcement), reducing risk while limiting customization. |
| Flexibility & Customization | • High flexibility: You can choose custom networking, node images (including Windows nodes), and adjust nearly every setting. | • Limited customization: It follows a fixed, opinionated setup (e.g., Linux-only nodes, no manual node pool creation), which may not suit all specialized requirements. |
| Operational Complexity | • Requires significant manual configuration and ongoing management, leading to higher operational overhead. | • Reduced operational workload as Azure handles many day-2 operations, enabling you to focus on application development rather than infrastructure management. |
| Cost Considerations | • Offers flexibility to optimize costs manually (e.g., using spot instances, custom scaling settings) but is prone to misconfigurations that can waste resources. | • Built-in autoscaling and resource optimization may lower compute costs during idle periods, but mandatory managed add-ons (like monitoring) could slightly increase overall spend. |

I've been hands-on with AKS for years, and seeing this new Automatic mode in action left me both impressed and with a few reservations. Here's my take: AKS Automatic is an awesome step forward in easing Kubernetes ops on Azure, but it's not a solution for everything.

First, what I like about it is that it truly delivers on reducing complexity. I remember the countless times I've set up cluster autoscalers, tweaked node pool sizes, or wired up Workload Identity, and so on. Also, the security posture out-of-the-box is a great start: no public endpoints by default, no lingering credentials, and policy guardrails in place. It pushes Azure's narrative of enterprise-ready Kubernetes with features like Azure AD integration and Azure Policy enforcement baked in. This is the kind of stuff that could save a new Kubernetes user from making dangerous mistakes.

I also appreciate that Microsoft isn't dumbing down Kubernetes – they preserve the entire API. This was a concern initially: would AKS Automatic be like Azure Container Apps (which hides Kubernetes)? But no, you still get all the Kubernetes goodness (CRDs, custom controllers, etc.). So, in a way, it's the best of both: managed convenience without sacrificing Kubernetes extensibility; and another powerful part is that you can go back to standard with a push of a button. AWESOME!

Is it ready for production use? Today (in preview), I'd be cautious. Not because it performed badly – it was stable in my tests – but because preview means no official support SLAs. Also, any preview can have limitations that might not be fully documented. For example, earlier previews had an issue where the API server was public by default without the usual restrictions, which would have been a showstopper for some secure environments.

However, I'd likely hold off for larger, more complex environments until you validate a few things:

AKS Automatic looks excellent for dev/test environments and smaller teams/startups. It provides a production-grade setup without the overhead – almost like having a managed "platform team" provided by Azure.

This blog runs on an AKS Cluster which I never have time to manage so I will dogfood it with my own production blog 😀

For large enterprises with existing AKS setups, moving to Automatic would be trickier because they likely have specific customizations or processes. However, I can see enterprises using Automatic for new projects that don't have legacy requirements. It could also be attractive for edge cases like multi-tenant clusters where you want Azure to ensure one tenant's misconfiguration doesn't compromise the cluster (e.g., the built-in policies might prevent one team from doing something that affects others).

I suspect over time, the gap between AKS Standard and Automatic will blur, or Automatic might even become the default for most users, with "manual mode" only for advanced scenarios. Right now, we're in early days, and I wouldn't force Automatic into a scenario it's not suited for (e.g., don't use it if you absolutely need Windows nodes or some niche feature it doesn't support). But if your use case aligns, I think go for it (with proper testing) – it will likely save you time and avoid many common pitfalls.

That being said, long post, signing out, have a good one!