Azure DNS Private Resolver

This article examines Azure DNS Private Resolver, a managed way to resolve DNS between on-premises environments and Azure. If you want to retire DNS forwarder VMs and reduce manual work, read on to see if Azure DNS Private Resolver fits your needs.

Remember when almost everybody said that the cloud would take over and nobody would do on-premises anymore? Well, here we are, and it still hasn't taken over. My opinion back then was that hybrid would be the norm and would stay the norm for a long time.

Moving all your workloads to the cloud is not always a cheap or desirable option. Compliance and data governance requirements can still tie your hands so that a complete migration is not possible, or the cost of moving a workload to the cloud is simply too high, e.g., a VM with 32 TB of storage, times five.

I advocate starting migrations with the workloads that make sense in the cloud and taking a lift & modernize approach rather than lift & shift. Small and medium workloads that need to scale up and down rapidly make more sense in the cloud; 100 TB data warehouse systems do not.

I worked on a project some time ago, a lift & shift that never made sense. It involved migrating web servers, SQL servers, Hadoop servers, and the crème de la crème, RDS servers. RDS can make sense in Azure as an alternative to Azure Bastion; in that specific case, however, migrating it never made technical or functional sense, and the only sensible move was to ditch it.

Long story short, after I gave up and told the client what moving everything to Azure would cost, the discussion shifted to "let's do hybrid" because the prices had skyrocketed.

Coming to the end of the story, the hybrid approach was taken, the VPN gateway was configured, a hub & spoke architecture was put in place, and behold, the first problem appeared, the one that afflicts the entire internet and scares IT people: DNS.

Simple question: how do you propagate DNS to the cloud and back? Back then, the answer was a simple VM acting as a DNS forwarder so that on-premises servers could resolve Azure Private DNS zones and vice versa. This added overhead to an already complicated solution, because if that VM failed, DNS would not resolve at all.

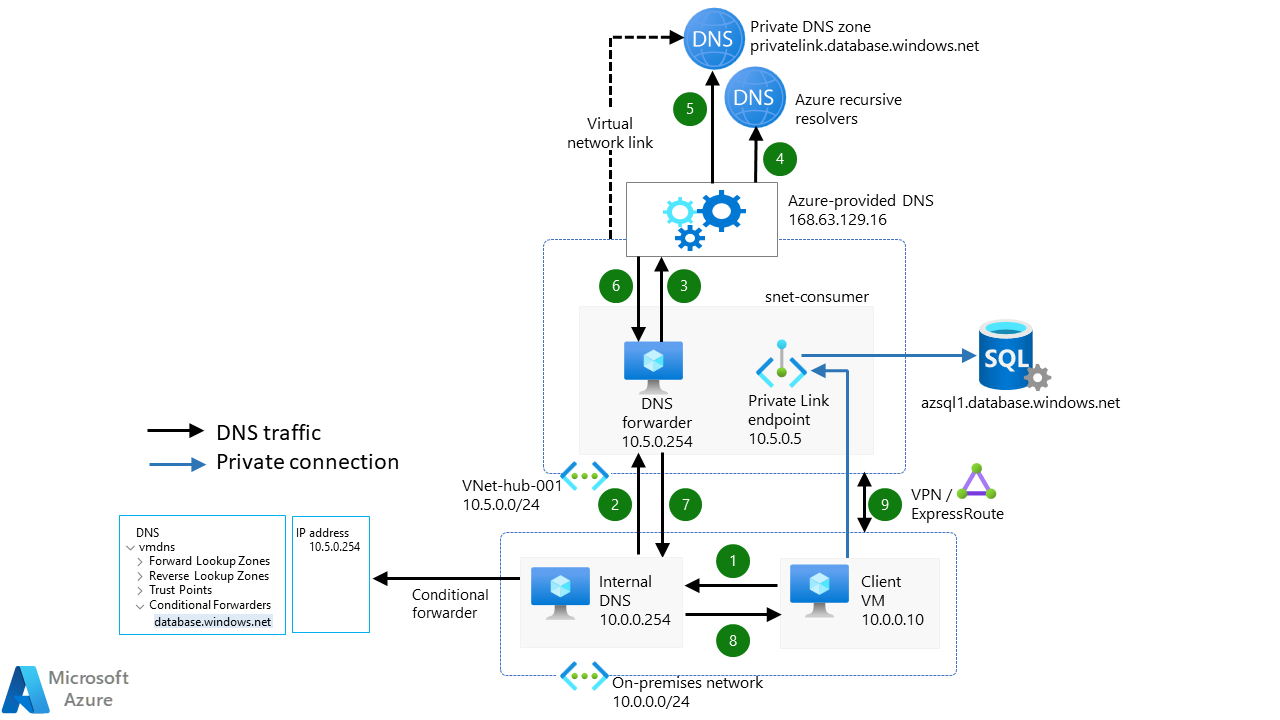

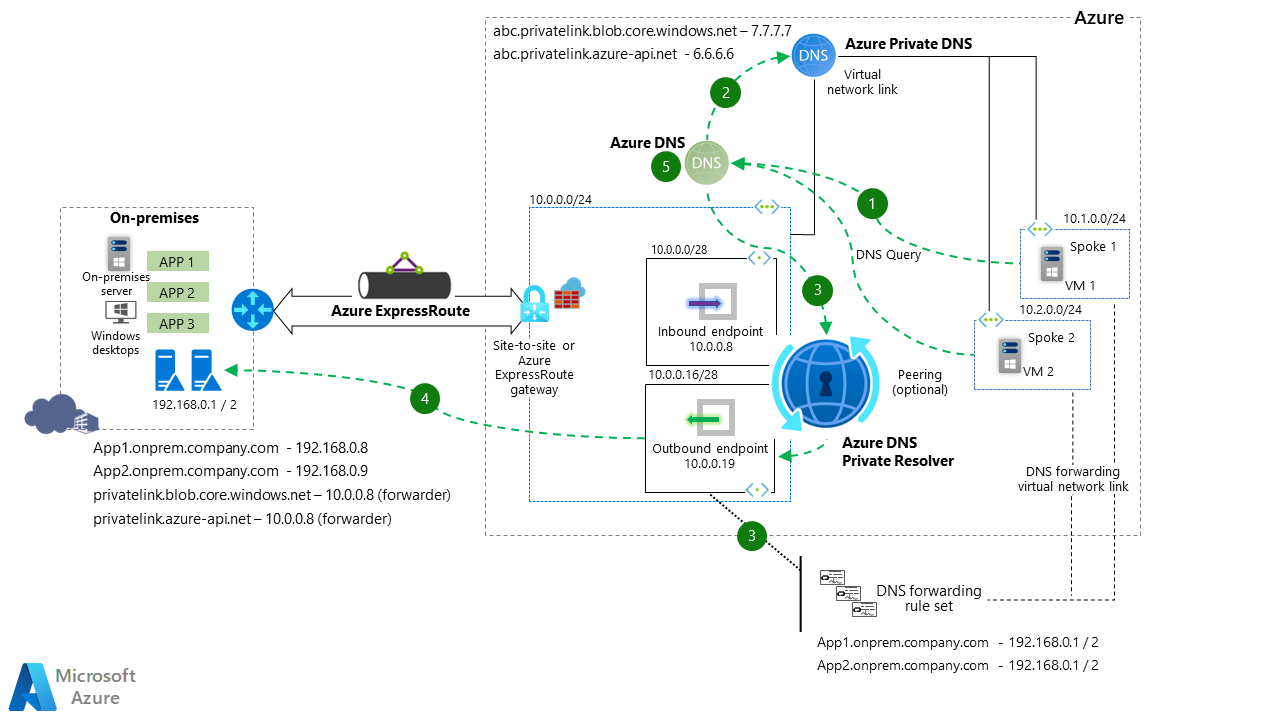

In the diagram above, you can see how you would access a SQL database from on-premises. A VM asks the on-premises DNS server where that SQL database is, the conditional forwarder forwards that query to the DNS VM in Azure, and from there, it's pretty self-explanatory.

As you can see, this problem went a long time without a clear solution, and you had to make the best of what you had.

Azure DNS Private Resolver is the solution to the exact problem I talked about earlier. It is a PaaS solution that eliminates VMs from the equation and provides a highly available, redundant, scalable mechanism for DNS queries. The system works similarly to the forwarder VM. The main difference is that you create endpoints: inbound endpoints receive queries (the on-premises DNS forwards queries to them), and outbound endpoints send queries out according to the rule sets you define.

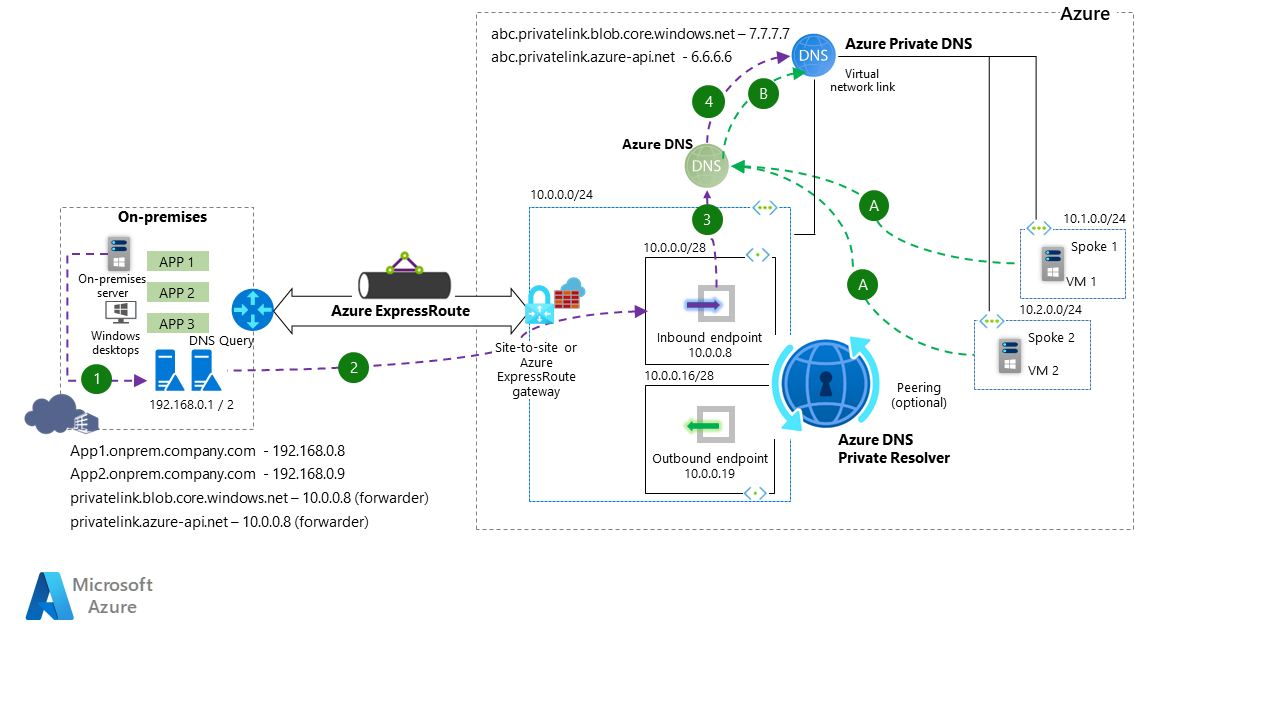

Adapting the whole architecture to use Azure DNS Private Resolver instead of a DNS forwarder VM, you can see that the flow is similar, with the main difference being that you're forwarding requests to a PaaS system rather than a VM.

The on-premises DNS query goes to the local DNS server, which forwards it to the Private Resolver inbound endpoint. The resolver then checks whether the entry exists in the Azure Private DNS zone and returns the answer.

Now that we understand the main point of the Azure DNS Private Resolver, let's see how we can configure it.

Setting up a proof of concept for a configuration of this type can be complicated. I connected to an Azure VPN Gateway using a Ubiquiti EdgeRouter and set up a conditional forwarder on my Pi-hole system to forward requests toward the Azure resolver. I went this route to experiment with the end-to-end scenario and see if it's a "simple" plug & play or requires more tinkering.

The result was that configuring the "on-premises" part was more complicated than the Azure part.
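To give an idea of what the on-premises side can look like, here is a minimal sketch that renders dnsmasq-style forwarding lines, assuming your Pi-hole build still reads custom dnsmasq configuration files. The zone name and the inbound endpoint IP (`10.0.0.4`) are placeholders for illustration, not values from my lab, and the helper `render_dnsmasq_forwarders` is hypothetical.

```python
import ipaddress
from typing import Iterable, List


def render_dnsmasq_forwarders(zones: Iterable[str], resolver_ip: str) -> List[str]:
    """Build dnsmasq 'server=/zone/ip' lines that forward each zone to resolver_ip."""
    # Validate the IP early so a typo never reaches the config file.
    ip = ipaddress.ip_address(resolver_ip)
    lines = []
    for zone in zones:
        # Normalize: trim whitespace, drop a trailing dot, lowercase.
        cleaned = zone.strip().strip(".").lower()
        if not cleaned:
            raise ValueError("empty zone name")
        lines.append(f"server=/{cleaned}/{ip}")
    return lines


if __name__ == "__main__":
    # Hypothetical values: a private link zone and an inbound endpoint IP.
    zones = ["privatelink.database.windows.net"]
    for line in render_dnsmasq_forwarders(zones, "10.0.0.4"):
        print(line)
```

The generated lines would go into a custom dnsmasq configuration file on the Pi-hole; where that file lives and whether it is loaded depends on your Pi-hole version, so treat that part as something to verify on your own system.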

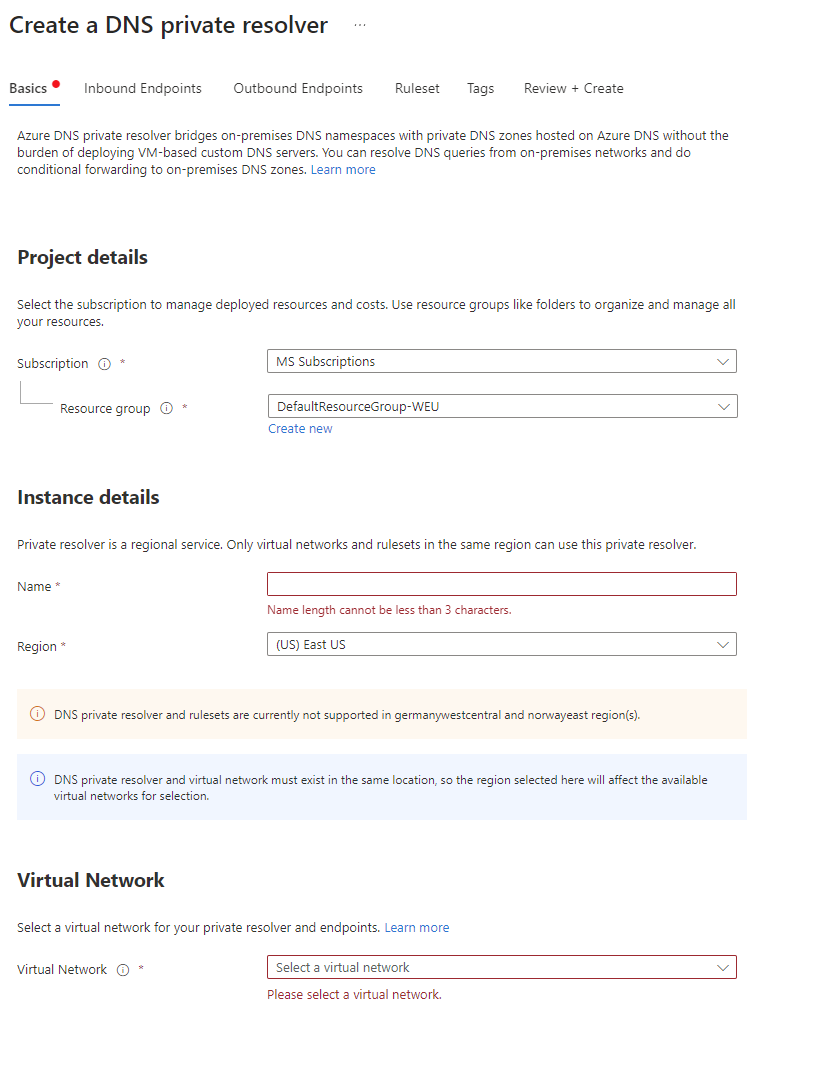

Configuring the DNS Resolver is quite simple in Azure: go to the portal, create a new resource, and search for DNS Private Resolver.

After that, follow the wizard; without much hassle, you will have the resolver deployed and configured.

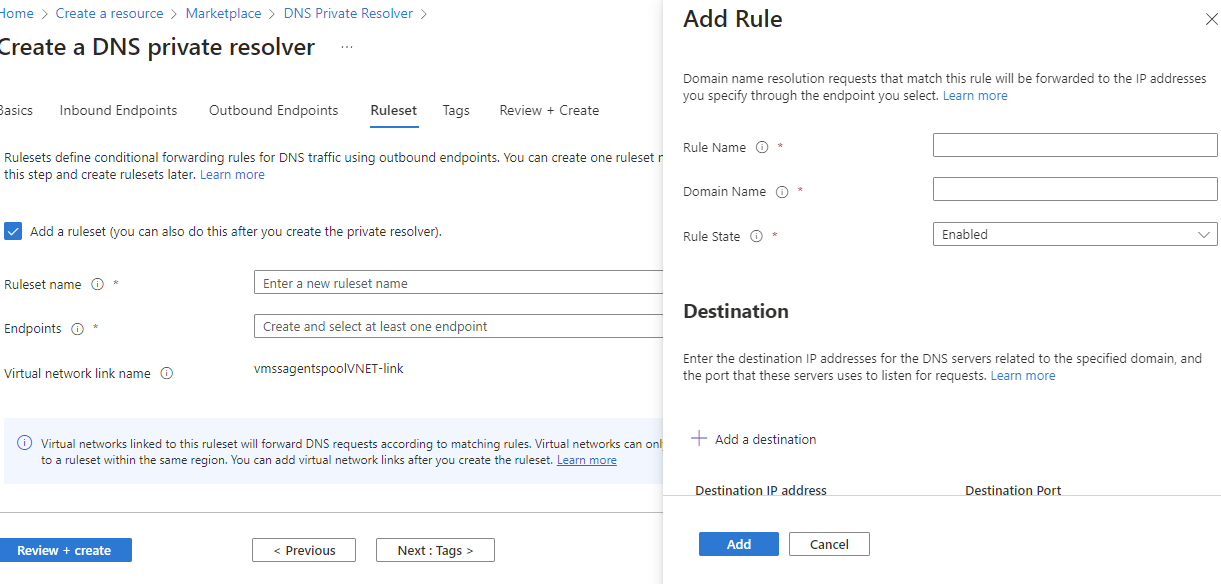

Inbound endpoints are easy; you create them in Azure and point your on-premises DNS at them. The outbound endpoint, on the other hand, requires a rule set to be defined. It's not that complicated, and if you didn't configure it when you created the DNS Resolver, you can create an outbound endpoint after the fact, and the same goes for the rule set.

The rule set lets you define forwarding rules for the domain names of your on-premises environment.

Once everything is created, you're good to go and can start testing and validating your configuration.
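For the validation step, a small script can save some manual `nslookup` runs. The sketch below is one possible approach, not an official tool: it resolves a list of names with the system resolver and reports whether each one came back with private addresses, public addresses, or nothing. The function names are my own for this example. A private link name that resolves to a public IP is usually a hint that the query never reached the resolver.

```python
import ipaddress
import socket
from typing import Callable, Dict, Iterable, List


def resolve_ipv4(hostname: str) -> List[str]:
    """Resolve hostname with the system resolver; return unique IPv4 addresses."""
    try:
        infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    except socket.gaierror:
        # Name did not resolve at all.
        return []
    return sorted({info[4][0] for info in infos})


def check_names(
    hostnames: Iterable[str],
    resolver: Callable[[str], List[str]] = resolve_ipv4,
) -> Dict[str, str]:
    """Classify each hostname as 'private', 'public', or 'unresolved'."""
    results = {}
    for name in hostnames:
        addresses = resolver(name)
        if not addresses:
            results[name] = "unresolved"
        elif all(ipaddress.ip_address(a).is_private for a in addresses):
            results[name] = "private"
        else:
            results[name] = "public"
    return results
```

Call `check_names([...])` with the names you expect to resolve through the hybrid setup, once from an on-premises machine and once from an Azure VM, and compare the results. The `resolver` parameter exists only so the logic can be exercised without a live DNS setup.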

I mentioned rule sets and outbound endpoints. They work together: you configure a rule set whose rules match the domain names of your on-premises workloads. This behaves like DNS forwarding to on-premises, but a query is forwarded only when it matches a rule in the rule set. So if a VM queries for an on-premises application and a matching rule exists, the query is forwarded to the on-premises DNS system.

The diagram shows the traffic flow from Azure to on-premises. As Azure DNS and Azure DNS Private Resolver integrate natively with the VNET, you get the forwarding rules in all the VNETs linked to the rule set. If a VM looks for app1, which is located on-premises, the rule is hit, and the request is forwarded through the outbound endpoint to the on-premises DNS, which resolves the query and returns the answer. If nothing hits the rule set, Azure DNS resolves the request itself, and if no matching record exists there, the query returns nothing.
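The matching behavior can be sketched in a few lines. This is a simplified model, assuming that the most specific (longest) domain suffix wins when several rules match; the rule names and target IPs are made up for illustration, and the real service has more features than this sketch covers.

```python
from typing import Dict, List, Optional


def match_rule(query_name: str, rules: Dict[str, List[str]]) -> Optional[List[str]]:
    """Return the target DNS servers of the longest matching suffix, or None."""
    labels = query_name.rstrip(".").lower().split(".")
    # Normalize rule domains: drop the trailing dot and lowercase.
    normalized = {d.rstrip(".").lower(): targets for d, targets in rules.items()}
    # Try the full name first, then drop the leftmost label each round,
    # so the first hit is always the longest matching suffix.
    for i in range(len(labels)):
        candidate = ".".join(labels[i:])
        if candidate in normalized:
            return normalized[candidate]
    # No rule matched: in this model the query stays with Azure DNS.
    return None


if __name__ == "__main__":
    # Hypothetical rule set: domain suffix -> on-premises DNS servers.
    rules = {
        "corp.example.": ["192.168.1.10"],
        "app1.corp.example.": ["192.168.1.20"],
    }
    print(match_rule("app1.corp.example", rules))
    print(match_rule("db.corp.example", rules))
    print(match_rule("sql.privatelink.database.windows.net", rules))
```

A `None` result corresponds to the "nothing hits the rule set" case described above, where the query is not forwarded on-premises.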

I wish this solution had been available much sooner, as some solutions I've designed could have benefited greatly from it. Lowering the complexity of any design goes a long way, and it saves a lot of man-hours when it comes to debugging issues.

This solution is much cheaper to maintain, effectively costs less than the two VMs you would need for HA, and integrates with Azure seamlessly.

That being said, I hope you learned something, and as always, have a good one!