Dynamic ConfigMaps in AKS

Handling configurations in app development, especially with Kubernetes, can quickly turn into a real headache. It's more than just trackin

Cost management is familiar territory; we do it day in, day out. Well, at least that's what I do most of the time when I'm not thinking of new ways to create savings through automation. Budgeting and cost management were easy before we grouped workloads in container orchestrators. You had the workload and the tags, and then you started applying the procedures. Now, with containers and orchestrators, you have a bulk node pool with 'N' VMs that consume resources and accrue costs. You cannot easily tell whether the cluster is right-sized for the workloads running on it, or how service costs split when they are shared between applications or projects.

Recently, a new AKS feature has popped up that lets you see the costs inside the cluster and gives you the insights you need to start acting on cluster right-sizing, better autoscaling, better reservation use, and nudging projects that don't use cluster resources as efficiently as possible. The feature is called Azure Kubernetes Service cost analysis, and it is documented on Microsoft Learn.

In other words, we have an add-on built on top of OpenCost that analyzes costs on your cluster and correlates them with your Azure invoice. Getting started is easy; follow the documentation from the feature link, and you should be golden. However, the work doesn't end there: what will you do once you have the information?

My subscription cannot show the cost management details, so I'll stick to the Azure docs pictures.

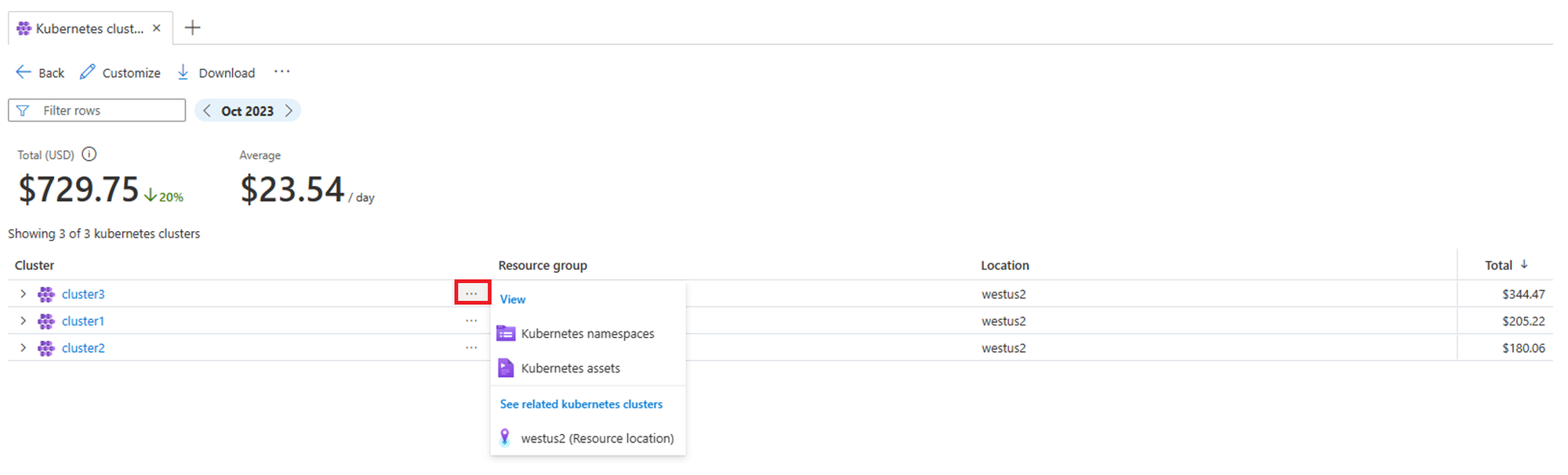

Kubernetes Clusters View: This is your dashboard for all things cost-related across your Kubernetes clusters within a single subscription. It's your go-to for a quick overview. Want more details on specific parts of a cluster, like namespaces or assets? Hit the ellipsis for more options and dive deeper.

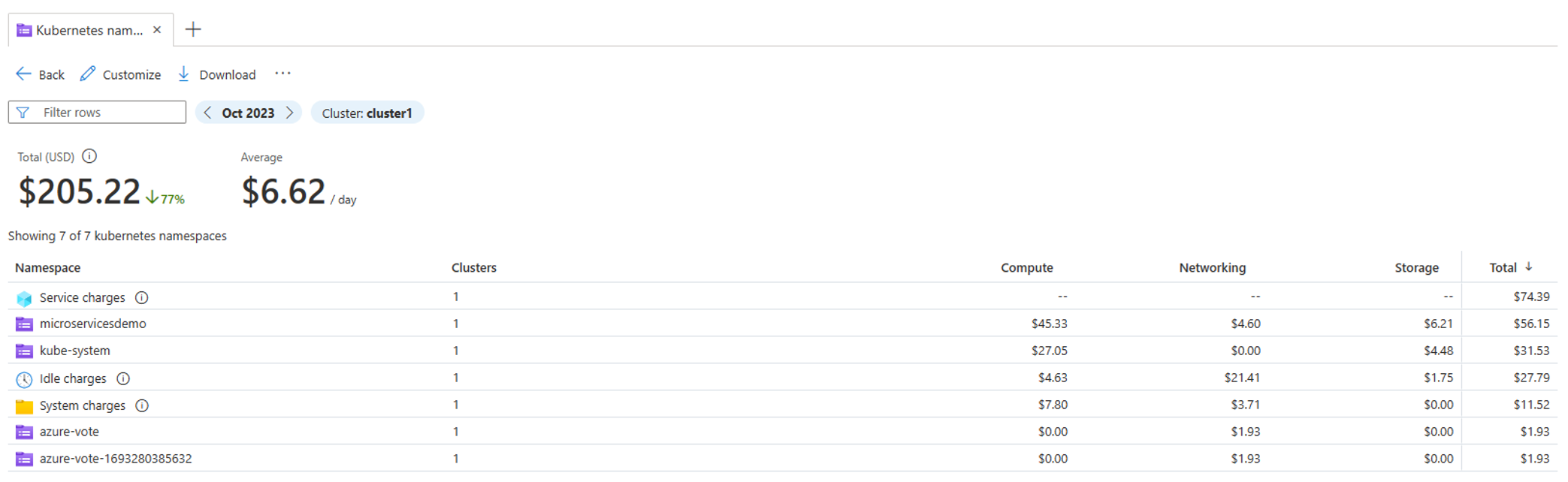

Kubernetes Namespaces View: Here, you zoom in on the costs tied to individual namespaces within your cluster. It's not just about the active costs; this view also breaks down Idle and System charges alongside the Service charges (think Uptime SLA costs). It's essential for pinpointing where your budget is going within each cluster segment.

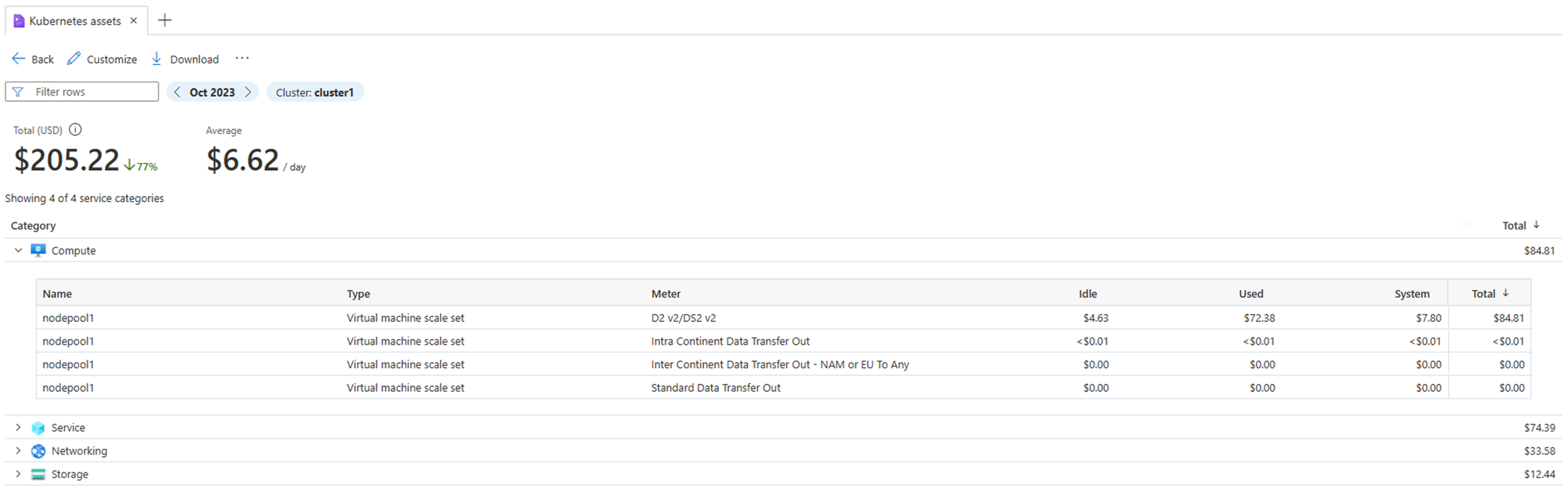

Kubernetes Assets View: Need to get into the nitty-gritty of where each penny goes? The assets view categorizes your spending into Compute, Networking, and Storage under specific service categories. It's beneficial for those managing or auditing costs closely, offering a clear view of where investments are allocated, including uptime SLA charges.

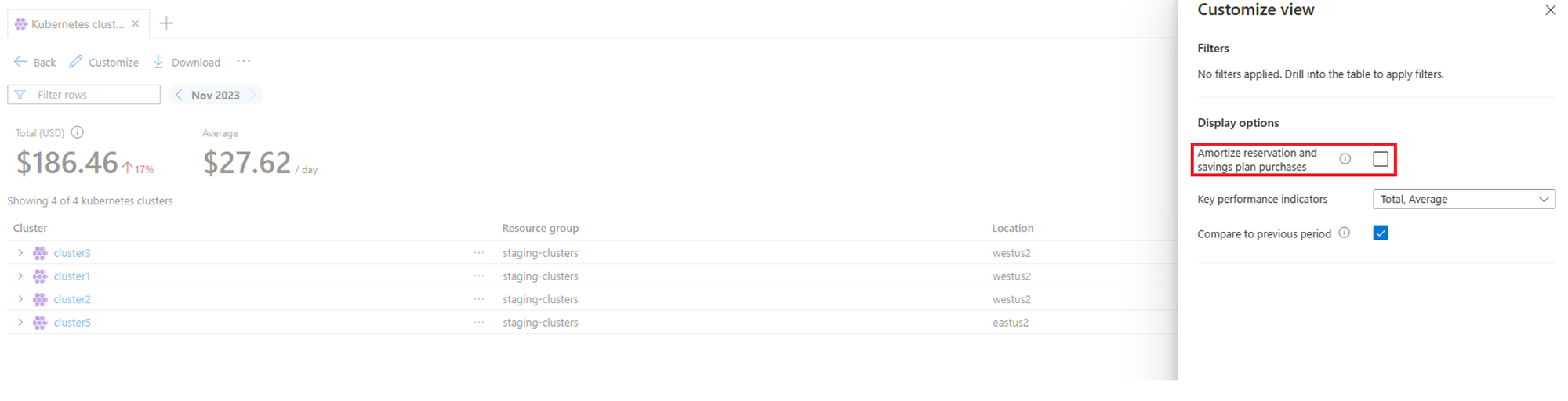

Switching to Amortized Costs: Azure shows you the actual costs by default. But if you're working with reservations and savings plans, you may want to switch to amortized costs for a smoother view of your spending over time. Go to Customize at the top of your view and opt for amortizing reservation and savings plan purchases.
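To make the difference between the two views concrete, here is a minimal sketch. It assumes a single upfront purchase on day 0 that is spread evenly over the term; the function name and the numbers are mine and purely illustrative, and this is not how Azure computes the view internally.

```python
def daily_cost_view(purchase_amount, term_days, amortized):
    """Return one cost value per day of the term.

    Actual view: the whole purchase lands on day 0 (assumed purchase day).
    Amortized view: the purchase is spread evenly across the term.
    """
    if amortized:
        return [purchase_amount / term_days] * term_days
    return [purchase_amount] + [0.0] * (term_days - 1)


# Hypothetical one-year reservation bought upfront for 3650 (any currency).
actual = daily_cost_view(3650.0, 365, amortized=False)
smooth = daily_cost_view(3650.0, 365, amortized=True)
print(actual[:3])  # one big spike, then nothing
print(smooth[:3])  # the same total, spread out per day
```

Both lists add up to the same amount; the amortized one is simply easier to compare against daily cluster usage.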

These features in Azure are designed to give you a comprehensive, drill-down capability into your Kubernetes spending, ensuring you have the visibility and control needed to manage your costs effectively. Whether you oversee multiple clusters or need to understand specific expenditures, these tools have you covered.

Installing something in a cluster is simple; making heads or tails of the information is something else. Let's start with the common problems I see in the field.

First, always start by right-sizing your clusters. This means choosing the correct VM sizes and types for your nodes to match your workload requirements without overprovisioning.

You dig into Azure Cost Management (ACM) and see that your or your company's applications are using 20% of the cluster resources while the other 80% is just sitting there and accruing costs.

With that information, you need to take a snapshot of the deployment usage and then input that data into a Kubernetes calculator, which will give you an idea of what you should use for the subsequent node pool deployments. I use the Kubernetes instance calculator (learnk8s.io) to do these types of size estimations, and it works well.
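If you want a quick back-of-the-envelope check before opening a calculator, the estimate can be sketched like this. It is a rough approximation under my own assumptions: the `headroom` fraction reserved for system pods and spikes is a made-up default, and the function is not what the learnk8s calculator does internally.

```python
import math


def nodes_needed(total_cpu_request, total_mem_request_gib,
                 node_cpu, node_mem_gib, headroom=0.2):
    """Estimate the node count for a pool from summed pod requests.

    headroom is the fraction of each node kept free for system pods
    and spikes (an assumed value; tune it for your cluster).
    """
    usable_cpu = node_cpu * (1 - headroom)
    usable_mem = node_mem_gib * (1 - headroom)
    by_cpu = math.ceil(total_cpu_request / usable_cpu)
    by_mem = math.ceil(total_mem_request_gib / usable_mem)
    # Whichever resource runs out first decides; never go below one node.
    return max(by_cpu, by_mem, 1)


# Hypothetical snapshot: workloads request 7 vCPU and 20 GiB in total,
# and the candidate node size is 4 vCPU / 16 GiB.
print(nodes_needed(7, 20, node_cpu=4, node_mem_gib=16))  # 3
```

Run it against a few candidate VM sizes and compare the resulting node counts with what the pool runs today.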

Autoscaling is a critical tool for managing AKS costs. It adjusts the number of nodes in your cluster based on current needs, similar to how a smart thermostat adjusts your home temperature (I have a Home Assistant system in my house, and it works extraordinarily well).

Use Horizontal Pod Autoscaling and Cluster Autoscaler

The key is that the cluster autoscaler adds nodes only when pods can't be scheduled for lack of resources. Without HPA adding replicas as load grows, it rarely has a reason to scale up. Also, this is not a set-and-forget thing; there might be cases where it goes haywire due to random factors or very high spikes, or it gets stuck. It's the cloud, and it's hyper-scale, so it happens. Set up monitoring as well so you can tell whether the configuration is behaving properly.
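For intuition on what HPA does with a metric, the Kubernetes documentation describes the core calculation as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a tolerance (0.1 by default). Below is a simplified sketch of that formula; the function name and the min/max defaults are mine, and the real controller also handles unready pods, missing metrics, and stabilization windows.

```python
import math


def hpa_desired_replicas(current_replicas, current_metric, target_metric,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified version of the documented HPA scaling formula."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target.
print(hpa_desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

Those extra replicas are what end up as pending pods when the nodes are full, and pending pods are what the cluster autoscaler reacts to.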

Vertical Scaling:

Like it or not, some workloads cannot horizontally scale, and that's fine. There are solutions for that as well. If you know that you need to throw resources into a deployment "just in case", then the solution is to implement VPA, or Vertical Pod Autoscaler. See "Vertical Pod Autoscaling in Azure Kubernetes Service (AKS)" on Microsoft Learn.

VPA follows the same idea as HPA, but it scales up instead of out: it adjusts a pod's CPU and memory requests based on observed usage rather than adding replicas. With this solution, you won't waste resources on a deployment for just-in-case moments but have the system automatically scale when needed.

Event Driven Autoscaling

I've written about KEDA before, but I didn't dive too deeply into the details. KEDA's main objective is to scale workloads horizontally based on events and actual traffic patterns. It integrates with many systems (see the Scalers list in the KEDA documentation), so you can set the application to scale when it actually needs to, not only on CPU or memory. You may have a message queue, and based on the number of messages, you need to scale the workload for better processing.
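The message queue case boils down to simple arithmetic, sketched below. This is my simplification of the idea, not KEDA's implementation: the function and parameter names are hypothetical, and in a real setup KEDA feeds the queue metric to an HPA that does the actual scaling.

```python
import math


def replicas_for_queue(queue_length, messages_per_replica,
                       min_replicas=0, max_replicas=20):
    """Pick a replica count from the backlog size.

    messages_per_replica is the backlog one replica is expected to
    handle (an assumed target you would tune per workload).
    """
    if queue_length <= 0:
        # Nothing to process: fall back to the floor, which may be zero.
        return min_replicas
    wanted = math.ceil(queue_length / messages_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


for backlog in (0, 120, 5000):
    print(backlog, "->", replicas_for_queue(backlog, messages_per_replica=50))
```

The interesting part for cost is the floor of zero: with an empty queue, the workload can disappear entirely instead of idling on CPU-based minimums.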

Your on-demand or dev-test cluster might not need to run 24/7, and you can set up automation to start and stop clusters. See "Stop and start an Azure Kubernetes Service (AKS) cluster" on Microsoft Learn.

When the cluster is shut down, your workloads are safe, and with proper automation, you will save a lot by shutting down clusters when they are idle and starting them up only when needed.
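To get a feel for how much a schedule is worth, here is a small estimate. The hourly rate and node count are made-up numbers, and the sketch covers node compute only; I'm assuming other charges, such as storage, keep running while the cluster is stopped.

```python
HOURS_PER_WEEK = 7 * 24


def weekly_compute_savings(hourly_node_cost, node_count, hours_on_per_week):
    """Return (amount saved per week, fraction saved) versus running 24/7."""
    always_on = hourly_node_cost * node_count * HOURS_PER_WEEK
    scheduled = hourly_node_cost * node_count * hours_on_per_week
    return always_on - scheduled, 1 - hours_on_per_week / HOURS_PER_WEEK


# Hypothetical dev cluster: 3 nodes at 0.20/hour, up 10 hours on 5 weekdays.
saved, fraction = weekly_compute_savings(0.20, 3, hours_on_per_week=50)
print(f"saved per week: {saved:.2f} ({fraction:.0%} of node compute)")
```

A working-hours schedule leaves the cluster off for most of the week, which is why this is usually the cheapest optimization to implement.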

Reservations

For those running stable, long-term workloads on Azure, consider Azure Reservations. Locking in a one- or three-year term can slash your compute costs by up to 72% compared to pay-as-you-go. It's a no-brainer for workloads that won't change regions or SKUs anytime soon, since the discounts automatically apply to the resources you're already using.

If your Azure spend is flatline (consistent, that is) but your resource needs jazz around different SKUs and regions, the Azure Savings Plan is your go-to. Opt for a one-year or three-year term and commit to a fixed hourly spend on compute. It doesn't box you into specific resources or regions, giving you the freedom to mix things up as needed. It is ideal for setups that need flexibility without missing out on savings.

Both options are solid for dialing down costs. Choose Azure Reservations for predictable setups locked on specific resources, or swing with an Azure Savings Plan for consistent spending with more freedom in resource use.
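One caveat before committing: a commitment is paid for whether the VM runs or not, so it only wins if the node is up often enough. A rough way to check, under my own simplifying assumptions (a single flat discount, no upfront or monthly payment differences, hypothetical numbers):

```python
def cheaper_option(payg_hourly, discount, utilization):
    """Compare average hourly cost of pay-as-you-go vs. a commitment.

    discount:    fractional discount of the commitment (e.g. 0.4 for 40%).
    utilization: fraction of the term the VM actually runs (0..1).
    """
    payg_cost = payg_hourly * utilization        # pay only while running
    committed_cost = payg_hourly * (1 - discount)  # pay for every hour
    return "commitment" if committed_cost < payg_cost else "pay-as-you-go"


# Hypothetical 40% discount: break-even sits at 60% utilization.
print(cheaper_option(1.0, discount=0.4, utilization=0.9))
print(cheaper_option(1.0, discount=0.4, utilization=0.3))
```

In other words, the break-even utilization is `1 - discount`. A dev cluster that is stopped most of the week will often fall below it, while an always-on production pool usually sits well above.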