AKS Automatic - Kubernetes without headaches

This article examines AKS Automatic, a new, managed way to run Kubernetes on Azure. If you want to simplify cluster management and reduce manual work, read on to see if AKS Automatic fits your needs.

Containers are easy: you build the image, deploy it to an orchestrator, and from there everything works. Right? Well, not exactly on the last part. If you're deploying your application to a service like Azure Container Apps, then Azure does most of the management. Still, not all applications are suitable for an environment like Azure Container Apps. Greenfield applications will most likely be a good fit, but when they grow, and your need for control grows with them, you will migrate to a full-blown Kubernetes deployment like AKS.

Deploying an AKS cluster is very easy. You use the Azure Portal, the CLI, or Terraform, and it's up and running. However, limitations will pile up unless you're aware of, and careful with, the bane of our existence: networking.

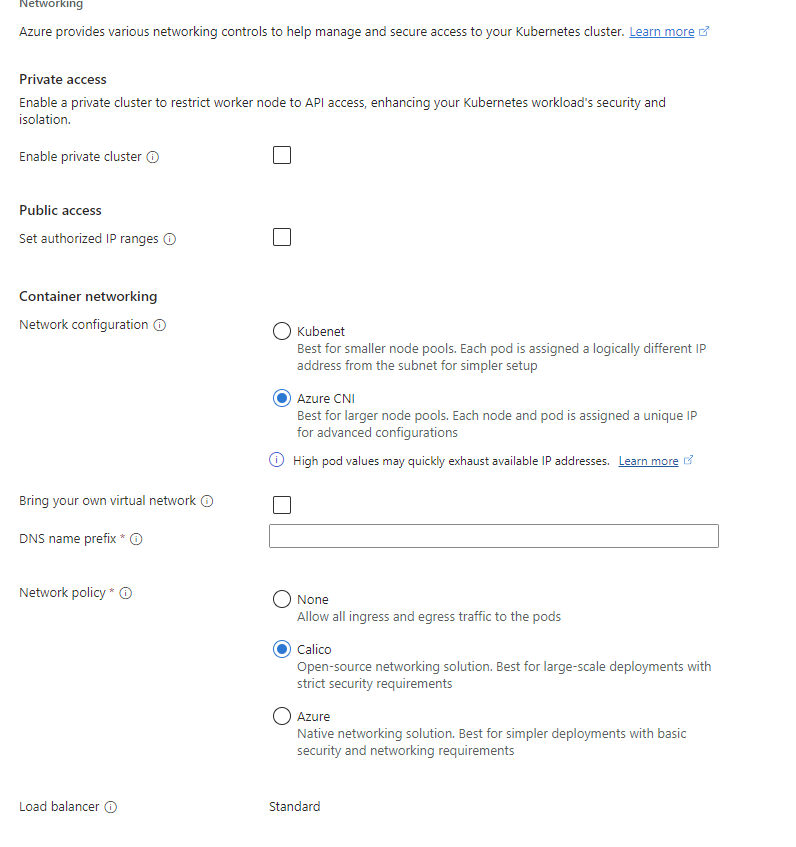

When creating an AKS cluster through the Azure Portal, the default option is Azure CNI with the Calico network policy, which is the best in terms of simplicity and use; however, we're also presented with Kubenet as a networking option and with None, Azure, and Calico as network policy options. So, what are they for, and how are they used? Are we locked in once we deploy, or can we migrate safely away from them once we grow?

Let's dive into each of them and see what they are about.

Kubenet, or Kubernetes networking, was the default networking plugin in AKS, offering simplicity and efficiency in smaller or less complex deployments. It assigns IP addresses to pods from a pool, providing basic inter-pod communication and external connectivity through NAT. However, because of the NAT mechanism, this simplicity has limitations, particularly regarding advanced networking features like network policies and inter-node or inter-network communication. Think of it like this: you have a router in your home, and your phone, PC, laptop and other home gadgets are connected by cable or WiFi. They don't have a public IP address; they have an address from a pool such as 192.168.0.0/24. The /24 means the block holds 256 addresses, 254 of which can be assigned to devices, with .1 generally assigned to the router itself. The 192.168 range is not internet addressable, so the router does NAT to translate 192.168.0.222 to your actual ISP-assigned address, and that's how traffic gets to the internet. It works the same way with Kubenet: the pods have private IP addresses that belong to the cluster, and nobody knows anything about them except the cluster itself.
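To make the home-router analogy concrete, here is a small sketch using Python's standard `ipaddress` module. It only inspects the 192.168.0.0/24 pool from the example above; nothing in it talks to Azure or to a cluster.

```python
import ipaddress

# The home-router pool from the analogy above.
home_lan = ipaddress.ip_network("192.168.0.0/24")

# Every address in the block, including the network and broadcast addresses.
total_addresses = home_lan.num_addresses

# Addresses that can actually be handed out to devices.
usable_hosts = len(list(home_lan.hosts()))

# Private (RFC 1918) ranges are not routable on the internet, hence the NAT.
is_private = home_lan.is_private

print(total_addresses, usable_hosts, is_private)
```

The same reasoning applies to a Kubenet pod range: the addresses are only meaningful inside the cluster, and anything leaving it is translated.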

This option was great for demos or POCs; however, those usually turned into production after a short while, and users started having issues once they asked for more flexibility.

TLDR: Don't use Kubenet. You now know about it; avoid it as much as possible.

Azure CNI became the default option for new AKS deployments once many issues started becoming apparent with Kubenet. It didn't go exactly like that, but that's how it played out. Many people had gone with the old default, later ran into too many issues, and ended up in the redeploy hassle.

Azure CNI was marketed for scenarios requiring advanced networking capabilities. It presented a comprehensive solution that integrated tightly with Azure's Virtual Network (VNet) and allowed pods to be assigned unique IP addresses from the subnet, promoting simple and seamless interconnectivity and direct access to resources within and outside Azure.

If you had no idea what "advanced networking capabilities" meant, and an IP per pod sounded like overkill, you would likely not have chosen this option, as it looked like it might require a ton of configuration before the application went live. The reality was that if deployed and left alone, it didn't cause any issues; it just worked, and you could still tinker with it to tailor it to your needs.

Going with the defaults might be fine in the short run, but in the long run, when the application or applications grow larger, the actual VNet constraints will cause issues. Imagine the cluster is deployed in a VNet and allocates IPs from a /24 subnet. What's the maximum number of pods you can deploy? If you're going to say 256, the answer is wrong; it's less than that. Remember that Azure reserves five addresses in every subnet (.0, .1, .2, .3 and the last one, .255) for networking purposes, and the cluster takes .4 for its first node, so at most 250 remain. However, AKS is not just one resource that consumes one IP address; every additional node needs its own address, and the cluster runs its own system pods that make the magic happen, so the actual number is even lower than that.
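The arithmetic can be sketched in a few lines of Python. This is a rough model, not an official sizing tool: it assumes Azure's five reserved addresses per subnet, and it assumes each node claims one address for itself plus one per pod slot, with 30 pod slots per node (a commonly seen default for this mode; check what your cluster actually uses). The subnet `10.240.0.0/24` and the function name are made up for illustration.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5   # assumed: .0, .1, .2, .3 and the broadcast address
MAX_PODS_PER_NODE = 30          # assumed default; configurable per cluster


def max_nodes_and_pods(subnet_cidr, max_pods=MAX_PODS_PER_NODE):
    """Estimate how many nodes and pods fit when pods share the node subnet."""
    subnet = ipaddress.ip_network(subnet_cidr)
    usable = subnet.num_addresses - AZURE_RESERVED_PER_SUBNET
    ips_per_node = 1 + max_pods          # the node itself plus its pod slots
    nodes = usable // ips_per_node
    return usable, nodes, nodes * max_pods


usable, nodes, pods = max_nodes_and_pods("10.240.0.0/24")
print(usable, nodes, pods)
```

Under these assumptions a /24 fits only a handful of nodes, and that is before leaving headroom for the extra node that appears during upgrades or for the system pods that occupy some of the slots.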

The implications are much larger than I described, but you get the point. Plan for larger deployments at the start; you will thank me later. Also, by being on this network plugin, you can pivot to other options without an entire cluster redeploy.

We covered Kubenet and Azure CNI; now, with CNI, we have a subset of plugin modes available. These are not exposed in the Azure portal, so the end user doesn't get confused or enter analysis paralysis about which is better.

In this part, we enter the realm of the CLI, where we comfortably write code to deploy workloads.

Azure CNI Overlay for AKS removes the need for extensive IP address planning by allowing pods to use IP addresses from a private CIDR, distinct from the Azure VNet subnet hosting the nodes. This model scales efficiently because it conserves VNet IP addresses and supports large clusters without the complexities of address exhaustion. Overlay networking simplifies configuration and maintains performance on par with VMs in a VNet, making it a strong choice for scalability. If you think this sounds like Kubenet, you're right: it's a more robust Kubenet, without the problems that Kubenet brings.

In this model, Kubernetes nodes are assigned IP addresses from the subnet associated with the AKS cluster. This approach ensures that each node can communicate with the broader network and other nodes within the cluster.

Overlay networking takes a different approach for pods. Instead of drawing from the same subnet as the nodes, pods receive IP addresses from a designated, private CIDR block specified when the cluster is created. This separation of IP spaces helps in several ways. For example, it conserves the subnet IP addresses, simplifies the routing of internal pod-to-pod communication, and enhances overall security by isolating the pod network from the node network.
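Since the pod range is meant to be separate from the VNet, a quick pre-flight check before creating the cluster can save some pain. The sketch below assumes you want the pod CIDR to overlap with none of the ranges you already use; the VNet ranges and the function name `find_overlaps` are hypothetical.

```python
import ipaddress


def find_overlaps(pod_cidr, existing_cidrs):
    """Return the existing ranges that collide with the proposed pod CIDR."""
    pod_net = ipaddress.ip_network(pod_cidr)
    return [
        cidr for cidr in existing_cidrs
        if pod_net.overlaps(ipaddress.ip_network(cidr))
    ]


# Hypothetical address space already in use (VNet, peered VNets, on-premises).
in_use = ["10.0.0.0/16", "10.1.0.0/16"]

clean = find_overlaps("192.168.0.0/16", in_use)     # no collision expected
clashing = find_overlaps("10.1.128.0/17", in_use)   # sits inside 10.1.0.0/16

print(clean, clashing)
```

An empty list means the proposed pod CIDR is clear of everything in the list you supplied; it says nothing about ranges you forgot to include.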

Each node in the cluster is allocated a /24 address space from this private CIDR, ensuring that each node has enough IP addresses to assign to pods as they are created and destroyed in response to the workload's demands.
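To see what the per-node /24 means for sizing, the sketch below carves the 192.168.0.0/16 pod CIDR used later in this article into /24 blocks. It is plain address arithmetic and ignores any per-node pod limits the platform enforces.

```python
import ipaddress

pod_cidr = ipaddress.ip_network("192.168.0.0/16")

# One /24 block is handed to each node, so the number of blocks caps the node count.
node_blocks = list(pod_cidr.subnets(new_prefix=24))

max_nodes = len(node_blocks)
addresses_per_node = node_blocks[0].num_addresses
first_block = str(node_blocks[0])
last_block = str(node_blocks[-1])

print(max_nodes, addresses_per_node, first_block, last_block)
```

A /16 therefore gives room for a few hundred nodes; if you expect more, pick a larger pod CIDR up front.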

For pod networking, a distinct routing domain within the Azure Network stack allows pods to communicate directly through a private CIDR, eliminating the need for custom routes or traffic tunnelling. This setup mirrors VM performance in VNets, maintaining seamless pod connectivity without the workloads being aware of any network address manipulation, enhancing efficiency and simplicity in network management.

AKS uses the node IP and NAT for communication outside the cluster, such as with on-premises networks or peered VNets. Azure CNI translates the source IP of pod traffic to the VM's primary IP, allowing the Azure network to route the traffic. Direct connections to pods from outside aren't possible; pod applications must be published as Kubernetes LoadBalancer services to be reachable on the VNet.
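The behaviour described above can be pictured with a deliberately simplified model: traffic that stays inside the pod CIDR keeps the pod's address, and everything else leaves with the node's address. This is only an illustration of the idea, not how the data plane is implemented; the addresses and the function name are invented for the example.

```python
import ipaddress

# Pod CIDR from the article's example commands.
POD_CIDR = ipaddress.ip_network("192.168.0.0/16")


def source_ip_seen_by(destination, pod_ip, node_ip):
    """Return the source address the destination would see in this simplified model."""
    if ipaddress.ip_address(destination) in POD_CIDR:
        return pod_ip    # pod-to-pod inside the cluster: no translation
    return node_ip       # leaving the cluster: source is rewritten to the node IP


pod_to_pod = source_ip_seen_by("192.168.3.7", "192.168.1.10", "10.0.0.5")
pod_to_onprem = source_ip_seen_by("172.16.0.20", "192.168.1.10", "10.0.0.5")

print(pod_to_pod, pod_to_onprem)
```

This is why firewall rules on the on-premises side have to be written against node addresses rather than pod addresses.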

New deployment and upgrading a deployment.

New deployments are easy, while upgrades are more complicated. The main idea is that an upgrade is possible; however, read the Azure docs carefully for the potential limitations. I usually write everything in PowerShell, but when you're working with AKS, I suggest using the Azure CLI. You will get more bang for your buck, as the CLI is better maintained than the PowerShell module.

#create a new cluster with overlay

$CLUSTER_NAME = "<clustername>"

$RESOURCE_GROUP = "<resourcegroup>"

$LOCATION = "<location>"

az aks create -n $CLUSTER_NAME -g $RESOURCE_GROUP --location $LOCATION --network-plugin azure --network-plugin-mode overlay --pod-cidr 192.168.0.0/16

Upgrading an existing cluster is a simple command, as you can see below. However, if you have Windows nodes, Overlay is not for you, and if you have custom routes, it's a bit worse as it requires more planning.

#upgrade a cluster with AzCNI

$CLUSTER_NAME = "<clustername>"

$RESOURCE_GROUP = "<resourcegroup>"

$LOCATION = "<location>"

az aks update -n $CLUSTER_NAME -g $RESOURCE_GROUP --network-plugin-mode overlay --pod-cidr 192.168.0.0/16

#ditch kubenet and go to AzCNI with overlay

$CLUSTER_NAME = "<clustername>"

$RESOURCE_GROUP = "<resourcegroup>"

$LOCATION = "<location>"

az aks update -n $CLUSTER_NAME -g $RESOURCE_GROUP --network-plugin azure --network-plugin-mode overlay

Azure CNI's dynamic IP allocation addresses the IP exhaustion problem by allowing pods to receive IP addresses from a designated subnet separate from the one used for node IPs. This separation significantly enhances the scalability and flexibility of deployments, enabling better optimization of the network architecture.

One of the benefits of this model is its support for large-scale deployments without the fear of IP exhaustion within the cluster's subnet. By dynamically allocating IP addresses to pods from a separate pool, AKS can support more pods per cluster, making it an ideal choice for enterprise-level applications that demand high scalability.
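A rough sizing sketch shows why the separate pod subnet helps. It assumes Azure's five reserved addresses per subnet and treats every remaining address as assignable; the two subnets are hypothetical, and real allocation happens in batches, so treat the results as upper bounds.

```python
import ipaddress

AZURE_RESERVED_PER_SUBNET = 5   # assumed: .0, .1, .2, .3 and the broadcast address


def usable_ips(cidr):
    """Addresses left in a subnet after Azure's reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - AZURE_RESERVED_PER_SUBNET


node_subnet = "10.0.0.0/24"   # hypothetical subnet for node IPs
pod_subnet = "10.0.4.0/22"    # hypothetical, separately sized subnet for pod IPs

node_capacity = usable_ips(node_subnet)
pod_capacity = usable_ips(pod_subnet)

print(node_capacity, pod_capacity)
```

Because the two pools are independent, you can grow the pod subnet for a pod-heavy workload without touching the subnet the nodes live in.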

Moreover, this approach offers improved network performance by facilitating direct pod-to-pod communication, bypassing the need for NAT (Network Address Translation), which can introduce latency. It also allows for more granular control over network policies and security, providing administrators with the tools to enforce strict traffic rules and isolate workloads.

The downside is that this option has no upgrade path; you need to plan ahead or redeploy your clusters to use it. The main benefits are clear, and if you know, or even have a gut feeling, that you might run into IP exhaustion, do the planning and go with this option, as it will save you the hassle of explaining why everything fails at a specific scale.

This is a more complex deployment, so adding snippets would be pointless. I suggest checking the Azure docs for this one: "Configure Azure CNI networking for dynamic allocation of IPs and enhanced subnet support - Azure Kubernetes Service | Microsoft Learn".

Integrating AzCNI with Cilium simplifies and secures network operations using eBPF technology. This approach is beneficial for large and complex deployments, as it uses the advanced capabilities of eBPF to process packets more efficiently, reducing latency and increasing network throughput. The solution also directly improves networking functions, such as load balancing and policy enforcement, within the Linux kernel, enhancing performance and security.

What's this eBPF? Quote from Tigera:

Going back, Cilium provides detailed policy control and network visibility, offering precise management over traffic flow and security policies, which are essential for modern applications. However, this solution primarily supports Linux nodes and may have a learning curve due to its complexity and nuanced management.

I would say go to their website, as they explain it much better :) Cilium - Cloud Native, eBPF-based Networking, Observability, and Security

A TLDR would be:

<Start quote>

"By making use of eBPF programs loaded into the Linux kernel and a more efficient API object structure, Azure CNI Powered by Cilium provides the following benefits:

What's the catch? There is no catch. Well, Windows is not supported, but beyond that caveat I wouldn't worry much. The best thing is that upgrading to Cilium is possible if you have a cluster deployed with CNI Overlay or CNI with dynamic IP allocation, so you won't have to plan a redeploy.

Upgrading is like this:

#cilium upgrade, very complicated command

$CLUSTER_NAME = "<clustername>"

$RESOURCE_GROUP = "<resourcegroup>"

$LOCATION = "<location>"

az aks update -n $CLUSTER_NAME -g $RESOURCE_GROUP --network-dataplane cilium

Read the docs beforehand, OK? See "Configure Azure CNI Powered by Cilium in Azure Kubernetes Service (AKS) - Azure Kubernetes Service | Microsoft Learn".

If we were to provide a TLDR of this article, it would sound something like this: Containers can simplify deployment, but not every application suits every environment. Azure Container Apps is ideal for new and smaller applications but may not be the best option for larger and more complex ones. As needs evolve, a move to a Kubernetes solution like AKS may be necessary for more control.

Deploying AKS is straightforward and can be done via the Azure Portal or CLI/Terraform. However, networking can complicate things, and Azure CNI and Calico are often recommended over Kubenet due to their simplicity and efficiency.

For larger or more complex applications, especially those requiring detailed network policies or extensive interconnectivity, Azure CNI is the preferred option. It provides unique IP addresses for pods within Azure's Virtual Network, making network management and direct resource access easier. While this choice may seem overwhelming initially, it pays off in scalability and network performance as applications grow.

Additionally, Azure CNI supports dynamic IP allocation and integrates with Cilium, powered by eBPF technology, to enhance network operations further.

These options introduce some complexity and considerations but offer scalable, efficient, and secure networking solutions for Kubernetes deployments in Azure, providing a solid foundation for growth and adaptation to evolving application needs.

So, what should I pick? Start small (not kubenet) and grow from there. Treat networking like a newborn; it initially requires a lot of care, but it will reward you in the long run. Treat it poorly, and a lot of incidents will happen :)

That being said, as always, have a good one!