AKS Automatic - Kubernetes without headaches

This article examines AKS Automatic, a new, managed way to run Kubernetes on Azure. If you want to simplify cluster management and reduce manual work, read on to see if AKS Automatic fits your needs.

Three years ago, I built a system that cleans up resource groups once they pass a certain expiration date. It had been extensively tested and passed all smoke and E2E tests, so I considered it ready for production. Still, I had a feeling that I had missed something, so I left it running over the weekend. On Monday morning, I ran the battery of tests again, and this time they failed in a very interesting way. The failures were unexpected: they suggested that the cluster running the system no longer existed, which couldn't be right, because that management plane was excluded from cleanup. You should have seen my reaction when I saw that the resource group still existed, but the MC_ group had been deleted. I laughed, thinking what a great system it was: it deletes everything, and then it self-destructs.

The problem was that, while I had added an exclusion for the management plane, I never excluded the MC_ groups by default, even though their lifecycle belongs to the AKS resource: they get deleted when the cluster gets deleted. Well, at least I had everything in Terraform, so I was 10 minutes away from getting back on track, or so I thought. As it turned out, my DR procedure was faulty, but that's a different topic.
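A different way to build that default exclusion, not the one used in this story, is to skip any resource group that another Azure resource manages. As far as I know, AKS sets the node resource group's managedBy property to the owning cluster's resource ID, so a cleanup job can filter on it. Below is a minimal sketch, assuming the job shells out to the Azure CLI; list_cleanup_candidates is a hypothetical name.

```bash
# Hypothetical helper: list only the resource groups a cleanup job may consider.
# Groups with a managedBy value (for example, AKS node resource groups, which
# point back to their managed cluster) are filtered out by the JMESPath query.
list_cleanup_candidates() {
  az group list \
    --query '[?managedBy==`null`].name' \
    --output tsv
}
```

Expiration checks would then run only against the names this prints.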

Still, while deleting the MC_ group was my fault, why was I able to delete it in the first place? Azure Red Hat OpenShift clusters don't let you delete their managed resource group, so why is this possible for regular AKS clusters?

In the end, I fixed the bug by searching for an event tied to that operation and went on with my life, but I still think this behavior isn't good and should be fixed. So, imagine how happy I was when Azure introduced the Node Resource Group Lockdown feature.

If you've worked with AKS for any length of time, you're familiar with the node resource group. This is where Azure deploys all the infrastructure resources your Kubernetes cluster needs to function: virtual machines, network interfaces, load balancers, and more. While it might be tempting to modify these resources directly when you need to make changes, doing so can lead to a world of pain.

The problem is that AKS expects to manage these resources itself, in response to changes you request through the AKS and Kubernetes APIs. When you make direct changes, you create a mismatch between the state AKS believes the resources are in and their actual state. This can lead to all sorts of issues, from minor inconsistencies to major cluster failures.

The Node Resource Group Lockdown feature (NRGLockdown) addresses this by applying a deny assignment that prevents users from directly modifying resources in the node resource group. Instead, it forces all changes to go through the proper AKS and Kubernetes APIs, exactly as they should.
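To see exactly which group the deny assignment will cover, you can ask the cluster instead of guessing from the default MC_<resource-group>_<cluster>_<region> naming pattern. A minimal sketch, assuming the Azure CLI is logged in to the right subscription; node_resource_group is a hypothetical helper name.

```bash
# Hypothetical helper: print the node resource group (the MC_ group) of a cluster.
# Usage: node_resource_group <cluster-name> <resource-group>
node_resource_group() {
  az aks show \
    --name "$1" \
    --resource-group "$2" \
    --query nodeResourceGroup \
    --output tsv
}
```

Reading the name from the cluster also covers clusters created with a custom node resource group name.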

Before diving in, you'll need to take care of a few prerequisites: a recent version of the Azure CLI and the aks-preview extension.

First, let's install or update the aks-preview extension:

```bash
# Install the aks-preview extension if you don't have it
az extension add --name aks-preview

# If you already have it, make sure it's up to date
az extension update --name aks-preview
```

Next, register the NRGLockdownPreview feature flag:

```bash
az feature register --namespace "Microsoft.ContainerService" --name "NRGLockdownPreview"

az feature show --namespace "Microsoft.ContainerService" --name "NRGLockdownPreview"
```
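Registration can take several minutes. Rather than re-running az feature show by hand, you can poll the state. A minimal sketch; the function name, the 30-second interval, and the 40-attempt limit are arbitrary choices, not values from the AKS documentation.

```bash
# Hypothetical helper: poll the NRGLockdownPreview feature until it reports "Registered".
# MAX_ATTEMPTS and POLL_SECONDS can be overridden; the defaults allow roughly 20 minutes.
wait_for_nrg_feature() {
  attempts=0
  while [ "$attempts" -lt "${MAX_ATTEMPTS:-40}" ]; do
    state="$(az feature show \
      --namespace "Microsoft.ContainerService" \
      --name "NRGLockdownPreview" \
      --query properties.state \
      --output tsv)"
    if [ "$state" = "Registered" ]; then
      echo "NRGLockdownPreview is registered"
      return 0
    fi
    echo "Current state: ${state:-unknown}, waiting..."
    attempts=$((attempts + 1))
    sleep "${POLL_SECONDS:-30}"
  done
  echo "Timed out waiting for NRGLockdownPreview" >&2
  return 1
}
```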

Once it shows as "Registered", refresh the Microsoft.ContainerService resource provider registration:

```bash
az provider register --namespace Microsoft.ContainerService
```

With these prerequisites in place, you can start using the feature.

If you're creating a new AKS cluster, enabling NRGLockdown is as simple as adding a parameter to your cluster creation command. The key parameter is --nrg-lockdown-restriction-level, which you'll set to ReadOnly.

For example:

```bash
# Fill in your own values
CLUSTER_NAME=""
RESOURCE_GROUP=""

az aks create \
  --name "$CLUSTER_NAME" \
  --resource-group "$RESOURCE_GROUP" \
  --nrg-lockdown-restriction-level ReadOnly \
  --generate-ssh-keys
```

This creates a new AKS cluster with the node resource group set to read-only mode, which means users can view the resources but not modify them. This is exactly what we want: visibility without the ability to break things.
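To confirm the setting took effect, you can read it back from the cluster. The property path nodeResourceGroupProfile.restrictionLevel is my reading of the AKS managed cluster API, so treat it as an assumption and check it against your own az aks show output; both function names are hypothetical.

```bash
# Hypothetical helper: print the node resource group restriction level of a cluster.
# "ReadOnly" means NRGLockdown is on; "Unrestricted" or an empty value means it is not.
nrg_restriction_level() {
  az aks show \
    --name "$1" \
    --resource-group "$2" \
    --query nodeResourceGroupProfile.restrictionLevel \
    --output tsv
}

# Hypothetical check: succeed only if the cluster's node resource group is ReadOnly.
assert_nrg_locked() {
  level="$(nrg_restriction_level "$1" "$2")"
  if [ "$level" != "ReadOnly" ]; then
    echo "Node resource group of $1 is not locked down (level: ${level:-not set})" >&2
    return 1
  fi
  echo "Node resource group of $1 is ReadOnly"
}
```

Running assert_nrg_locked right after the create or update command turns the setting into something a pipeline can fail on.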

Of course, many of us already have existing AKS clusters that we'd like to protect. Fortunately, you can add NRGLockdown to existing clusters as well:

```bash
# Fill in your own values
CLUSTER_NAME=""
RESOURCE_GROUP=""

az aks update \
  --name "$CLUSTER_NAME" \
  --resource-group "$RESOURCE_GROUP" \
  --nrg-lockdown-restriction-level ReadOnly
```

When you enable NRGLockdown with the ReadOnly restriction level, Azure applies a deny assignment to the node resource group. This deny assignment blocks all users from making changes to the group's resources, with one important exception: the AKS service itself is still allowed to make changes.

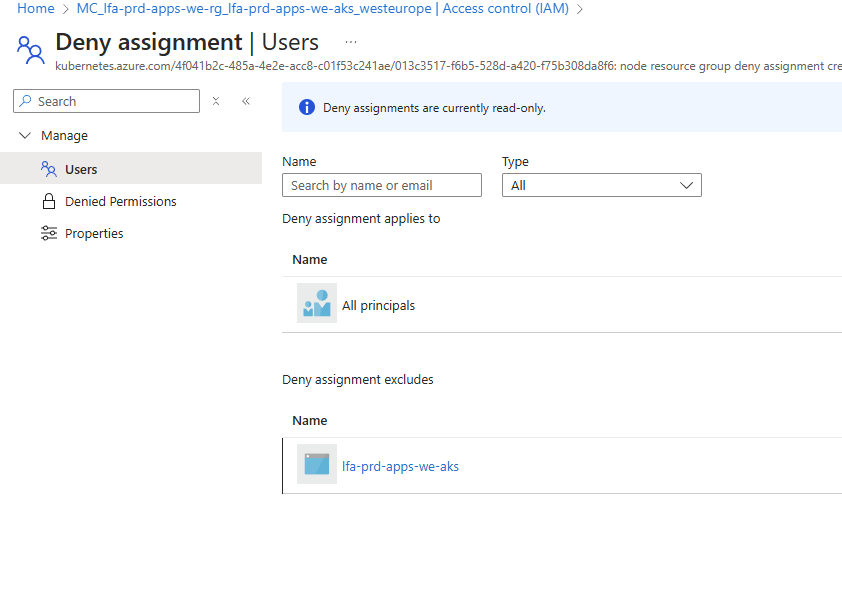

This is an important distinction. The AKS service uses a managed identity or service principal to manage the resources in the node resource group. When you enable NRGLockdown, an exclusion is added to the deny assignment for this identity, which allows the AKS service to continue functioning normally.

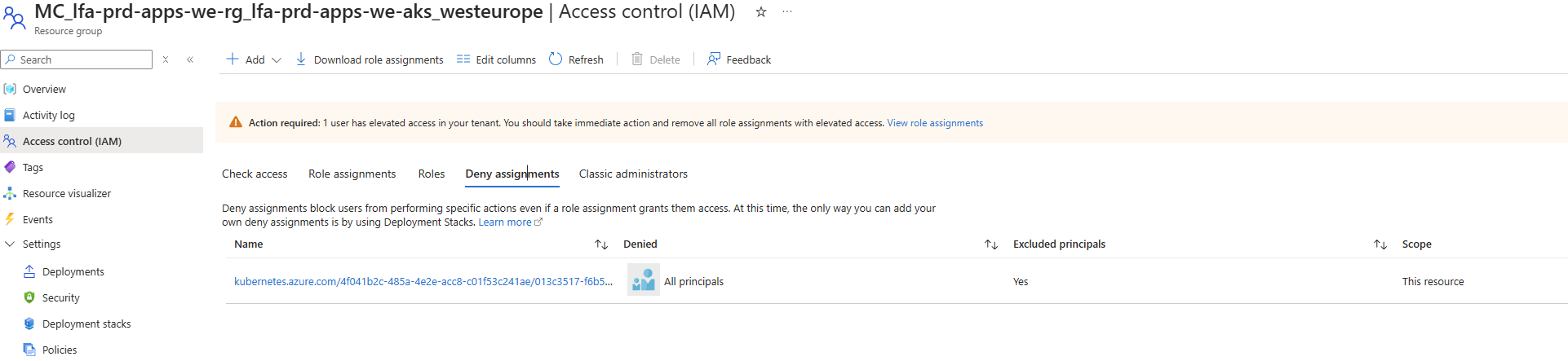

You can see this deny assignment by navigating to the node resource group in the Azure portal, clicking on "Access control (IAM)", and then selecting the "Deny assignments" tab. You'll see a deny assignment that applies to all principals except for the AKS-managed identity.
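If you prefer the command line to the portal, the same information is available through the ARM REST API. A minimal sketch using az rest; the helper name is hypothetical, and the 2022-04-01 api-version for Microsoft.Authorization/denyAssignments is an assumption worth checking against the current REST reference.

```bash
# Hypothetical helper: list deny assignment names on a cluster's node resource group.
# Assumption: api-version 2022-04-01 of the Microsoft.Authorization/denyAssignments API.
list_nrg_deny_assignments() {
  subscription_id="$(az account show --query id --output tsv)"
  node_rg="$(az aks show --name "$1" --resource-group "$2" \
    --query nodeResourceGroup --output tsv)"
  az rest --method get \
    --url "https://management.azure.com/subscriptions/${subscription_id}/resourceGroups/${node_rg}/providers/Microsoft.Authorization/denyAssignments?api-version=2022-04-01" \
    --query "value[].properties.denyAssignmentName" \
    --output tsv
}
```

Dropping the --query argument returns the full definitions, including the excluded principals.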

The AKS service can still provision new nodes when you scale the cluster through the AKS API (for example, with az aks nodepool scale or the cluster autoscaler), and it still makes the infrastructure changes your Kubernetes objects require, such as load balancer rules for a Service you create with kubectl or another Kubernetes API client. But when someone tries to modify a load balancer, network interface, or other resource directly in the Azure portal or via the Azure CLI, they'll get an "access denied" error.

Of course, there might be situations where you need to disable NRGLockdown temporarily to perform specific administrative tasks. If that's the case, you can remove it using:

```bash
# Fill in your own values
CLUSTER_NAME=""
RESOURCE_GROUP=""

az aks update \
  --name "$CLUSTER_NAME" \
  --resource-group "$RESOURCE_GROUP" \
  --nrg-lockdown-restriction-level Unrestricted
```

This sets the restriction level back to Unrestricted, which allows direct modifications to the node resource group. Once you're done, remember to re-enable NRGLockdown. Or don't, and find out later when somebody tampers with the load balancer.
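If you need this break-glass step more than occasionally, a wrapper that always restores ReadOnly makes the "forgot to re-enable it" outcome less likely. A minimal sketch, assuming bash and the Azure CLI; with_nrg_unlocked is a hypothetical name, and the restore step won't run if the script itself is killed midway.

```bash
# Hypothetical helper: lift NRGLockdown, run one command, then restore ReadOnly
# whether or not the command succeeded. Returns the command's exit status.
# With `set -e`, call it inside if/|| so a failing command can't exit before the restore.
# Usage: with_nrg_unlocked <cluster-name> <resource-group> <command> [args...]
with_nrg_unlocked() {
  cluster="$1"
  group="$2"
  shift 2

  az aks update --name "$cluster" --resource-group "$group" \
    --nrg-lockdown-restriction-level Unrestricted || return 1

  # Run the maintenance command and remember its exit status.
  "$@"
  cmd_status=$?

  # Re-enable the lockdown regardless of how the command went.
  if ! az aks update --name "$cluster" --resource-group "$group" \
    --nrg-lockdown-restriction-level ReadOnly; then
    echo "WARNING: failed to restore ReadOnly on $cluster" >&2
    return 1
  fi
  return "$cmd_status"
}
```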

A common concern might be: "Will enabling NRGLockdown break my existing automation or workflows?" Usually not, but it depends on how you manage your AKS clusters.

If you follow industry best practices and make all changes through the AKS and Kubernetes APIs (az aks, kubectl, Helm, and so on), enabling NRGLockdown won't affect your workflows.

However, if you have scripts or procedures that directly modify Azure resources in the node resource group, they will stop working when you enable NRGLockdown. This is actually a good thing: it forces you to update those procedures to use the proper AKS or Kubernetes APIs.
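A typical example is a script that resizes a node pool's virtual machine scale set in the node resource group with az vmss scale; once ReadOnly is in place, that call is denied. Below is a minimal sketch of the same change routed through the AKS API. scale_node_pool is a hypothetical name, and as far as I know, AKS rejects manual scaling on node pools with the cluster autoscaler enabled, so for those you would adjust the autoscaler's minimum and maximum counts instead.

```bash
# Before (denied under NRGLockdown): resizing the scale set directly, e.g.
#   az vmss scale --resource-group "$NODE_RG" --name "$VMSS_NAME" --new-capacity 5
#
# After: hypothetical helper that asks AKS to scale the node pool instead.
# Usage: scale_node_pool <cluster-name> <resource-group> <node-pool> <node-count>
scale_node_pool() {
  az aks nodepool scale \
    --cluster-name "$1" \
    --resource-group "$2" \
    --name "$3" \
    --node-count "$4"
}
```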

The transition period is usually short and well worth the improved stability and security that NRGLockdown provides.

Node Resource Group Lockdown is a small technical feature, but a very necessary one: it prevents accidental or uninformed changes to the underlying infrastructure, reduces support issues, enhances stability, and helps ensure your clusters behave as expected.

If you manage AKS clusters and haven't enabled NRGLockdown yet, I strongly encourage you to do so, even while it's still in preview. It's a simple change that can greatly improve your sleep quality. Nobody likes being woken up at 3 AM because security ran a "governance script".
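To find the clusters that still need it, you can query every cluster in the current subscription. A minimal sketch, assuming the restriction level appears as nodeResourceGroupProfile.restrictionLevel in az aks list output (verify against your own output); list_unlocked_clusters is a hypothetical name.

```bash
# Hypothetical helper: print <resource-group>/<cluster> for every cluster in the
# current subscription whose node resource group is not ReadOnly. Clusters that
# never had a restriction level set compare as null, so they are listed too.
list_unlocked_clusters() {
  az aks list \
    --query "[?nodeResourceGroupProfile.restrictionLevel!='ReadOnly'].[resourceGroup, name]" \
    --output tsv |
    while IFS="$(printf '\t')" read -r group name; do
      echo "${group}/${name}"
    done
}
```

Each line it prints is a candidate for the az aks update command with --nrg-lockdown-restriction-level ReadOnly.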

Have a good one!