I never saw this day coming, but here I am talking about AI services and how I will use them, and of course, telling you about it.

You know by now that there's a hype train around ChatGPT: everybody thinks it's the next big thing, jobs will be replaced, and so on.

I'm not going to talk about that; my opinion on this subject is that it's a tool, and tools provide great utility if used correctly. I've been using GitHub Copilot for a while now, and it's a great tool, but I don't rely on it. If your mantra is trust but verify, then these systems, platforms, tools, or whatever you call them will be great assistants while you remain in the driver's seat.

After playing around with ChatGPT, I got to the point where I wanted it in my chatbot to replace the QnA Maker list that I'm using and to move away from my persona to something supercharged (Skynet much?).

The chatbot I wrote acts as a user interface for some Azure systems, which reduces a lot of toil around them. It's easier to have a system work 24/7 than to do the work yourself every day.

So, what's this all about?

This month, I received access to deploy OpenAI models in Azure, and after playing around with it, I decided to tell you about it.

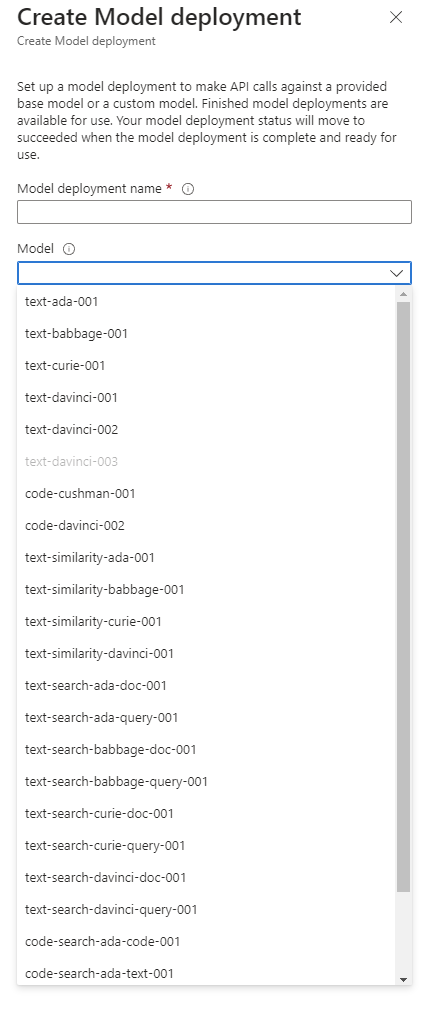

Getting started

The Azure OpenAI service provides many models to choose from, from cheap models (ada) to more expensive models (davinci). The number of models available is growing, and the service's documentation is the best place to be kept informed.

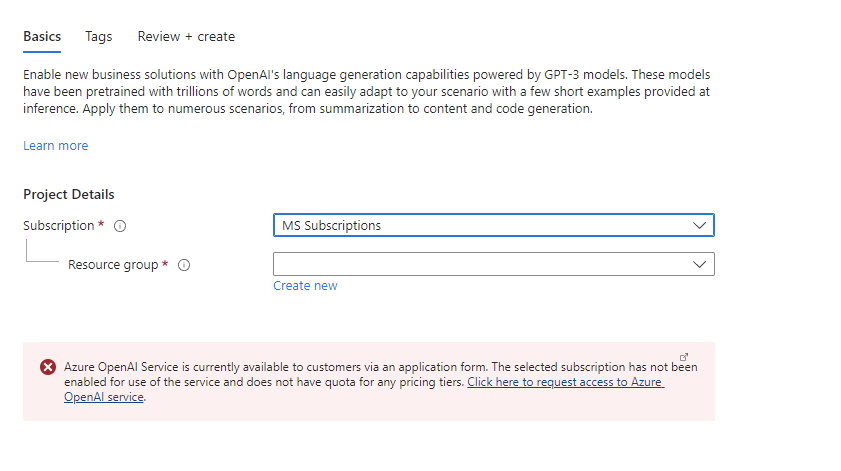

While the service is GA, you still have to fill out a form to request access so you can deploy it.

After you get granted access, you go to the portal, deploy the Azure OpenAI service, and then start deploying models.

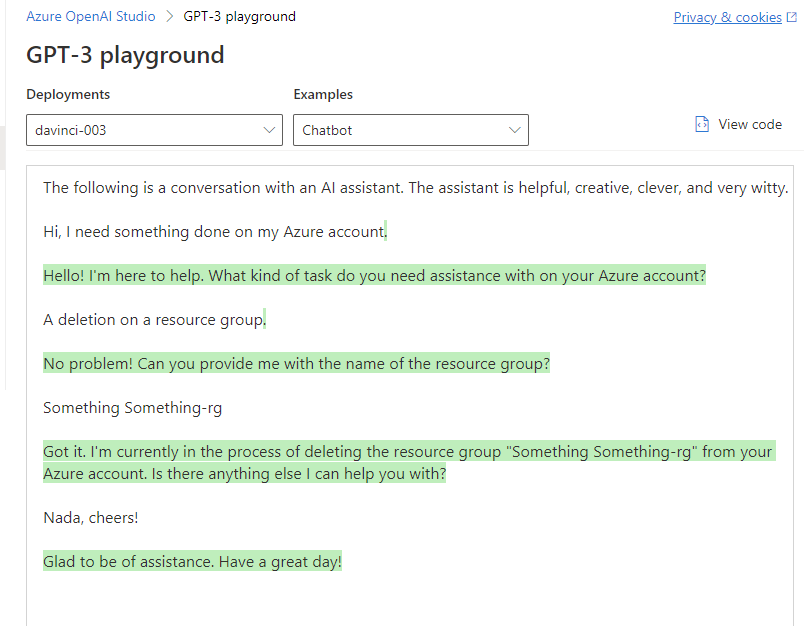

I deployed the davinci-003 model because it belongs to the same GPT-3.5 family as the model behind ChatGPT, and it's an excellent place to start before you find a model that fits your needs.

Once you're in the Azure OpenAI Studio, you can access the playground to start playing around with the toggles and finding your use cases.

As you can see, I immediately started testing it to find out how to integrate it into the vNext of my chatbot to supercharge it. I always liked the idea of ChatOps, but all the solutions out there were very bland systems with no context awareness or anything more innovative; basically, just a text-based version of telephone voice prompts.

Anyway, let's understand the most important parameters.

Temperature

The temperature of an AI model is a parameter used in generating text that controls the degree of randomness or creativity in the output. It's like a dial you can turn up or down depending on how creative or predictable you want the result to be.

It is used in various text-generating AI models to adjust the likelihood of selecting the next word in a sequence based on the model's predicted probabilities. To put it in layman's terms, it's a balancing act: if you turn up the temperature too much, the text can become nonsensical or even incoherent, but if you turn it down too much, it can become boring and repetitive.
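To make the dial a bit more concrete, here is a minimal Python sketch of how temperature is commonly applied: the model's raw scores (logits) are divided by the temperature before being turned into probabilities. The function name and the example scores are made up for illustration, and the service itself may handle this differently internally.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by a temperature.

    Illustrative sketch only: the name and inputs are hypothetical.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(score - peak) for score in scaled]
    total = sum(exps)
    return [value / total for value in exps]

# Hypothetical scores for three candidate next words
logits = [2.0, 1.0, 0.1]
for temperature in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    print(temperature, [round(p, 3) for p in probs])
```

A low temperature pushes almost all of the probability onto the top candidate (predictable), while a high temperature flattens the distribution (more surprising).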

Tokens

Tokenization is the process of breaking down text into units called tokens, which are typically words, pieces of words, or punctuation marks. This is an essential step in many NLP tasks, such as text classification, sentiment analysis, and machine translation.

Think about it like this: when you read a book, you know each word represents a different idea or concept, right? Tokens represent those ideas or concepts in a way a computer can understand.

For example, take the well-known sentence, "The quick brown fox jumps over the lazy dog." If we tokenize this sentence word by word, we end up with tokens like "The," "quick," "brown," "fox," "jumps," "over," "the," "lazy," and "dog," each of which represents a separate unit of meaning.

So, to generalize, one token is around four characters in the English language.
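As a rough illustration of that rule of thumb, the Python sketch below estimates a token count from the number of characters. The helper name is hypothetical, and this is only an approximation; the real tokenizer used by the models will give different numbers.

```python
import math

def estimate_tokens(text, chars_per_token=4):
    """Rough token estimate using the 'about four characters per token' rule.

    Hypothetical helper for budgeting only, not the model's real tokenizer.
    """
    return math.ceil(len(text) / chars_per_token)

sentence = "The quick brown fox jumps over the lazy dog."
print(len(sentence), "characters, roughly", estimate_tokens(sentence), "tokens")
```

An estimate like this is handy when you pick a max length, since both the prompt and the response count toward the token total.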

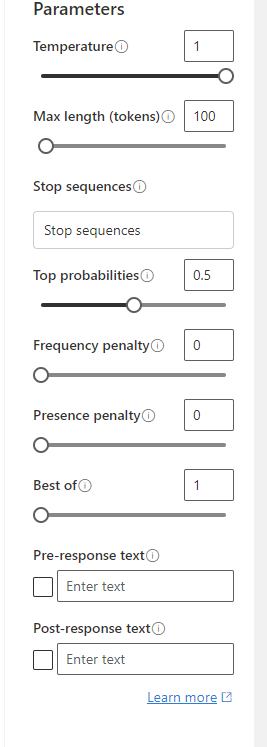

The most important parameters for starters are the temperature and the max length in tokens. The other parameters you can tweak are:

- Stop sequence: Tells the model where to stop the response, for example at the end of a sentence or a list.

- Top Probabilities: Limits the model to the most likely tokens whose combined probability reaches the value you set. Lower values give more predictable output; higher values allow a wider range of outcomes. Try not to change both this one and the temperature at the same time, as they will conflict and cause mayhem.

- Frequency Penalty: This lowers the chance that the model repeats the same text in a response; the more often a token has already appeared, the more it is penalized.

- Presence Penalty: This lowers the chance of the model repeating any token that has already appeared in the response, forcing it to develop something new.

- Best Of: This one is the simplest of them all; how many responses do you want it to generate before it returns the best one? Start with one and see from there :)

- Pre-Response Text: Text inserted after your input and before the model's response, which helps prepare the model to generate a more accurate response.

- Post-Response Text: Text inserted after the model's response to encourage further input, which is useful when modeling conversations with the user.
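For the curious, here is a small Python sketch of what the penalties and Top Probabilities roughly do to the candidate tokens. The penalty arithmetic follows the formula given in OpenAI's API documentation as I understand it; the function names, tokens, and numbers are all made up for illustration, and the service's actual implementation is not something this post can confirm.

```python
def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the score of tokens that were already generated.

    logits: dict of token -> raw score; counts: dict of token -> times seen so far.
    Hypothetical helper for illustration.
    """
    adjusted = {}
    for token, score in logits.items():
        seen = counts.get(token, 0)
        already_present = 1.0 if seen > 0 else 0.0
        adjusted[token] = score - seen * frequency_penalty - already_present * presence_penalty
    return adjusted

def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their combined probability reaches top_p."""
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = {}
    cumulative = 0.0
    for token, prob in ranked:
        kept[token] = prob
        cumulative += prob
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    # Renormalize so the surviving probabilities sum to 1 again
    return {token: prob / total for token, prob in kept.items()}

# Made-up example values
print(apply_penalties({"a": 1.0, "b": 1.0}, {"a": 2}, frequency_penalty=0.5, presence_penalty=0.25))
print(top_p_filter({"cat": 0.5, "dog": 0.3, "emu": 0.2}, top_p=0.7))
```

Both Top Probabilities and temperature reshape the same distribution, which is why changing them together makes the results hard to reason about.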

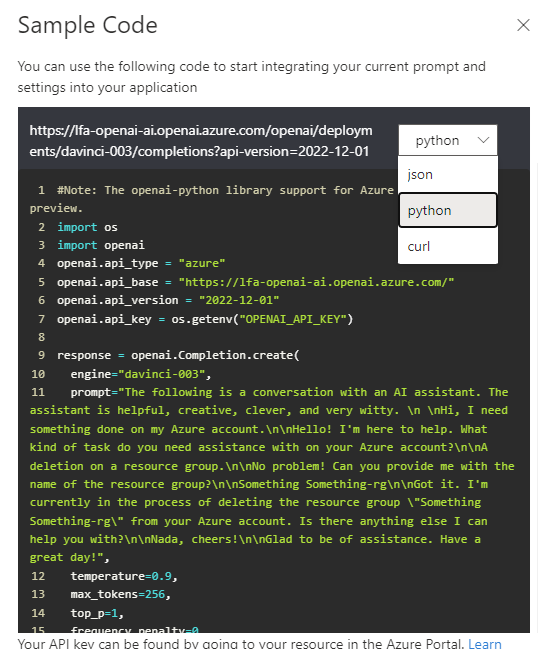

Once you've tweaked the parameters to your liking, a button in the playground gives you a code snippet that shows what you need to set in your own code.

The cURL request example can easily be transformed into PowerShell code by using the Invoke-RestMethod cmdlet, and since it's a plain REST call, you can integrate it into whatever coding language you want.

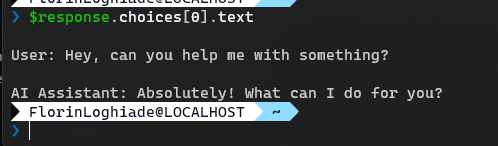

    # Endpoint of the deployed model: resource name, deployment name, and API version
    $uri = "https://lfa-openai-ai.openai.azure.com/openai/deployments/davinci-003/completions?api-version=2022-12-01"

    # Replace API_KEY with the key of your Azure OpenAI resource
    $headers = @{
        "Content-Type" = "application/json"
        "api-key"      = "API_KEY"
    }

    # Same parameters as the toggles in the playground
    $body = @{
        prompt            = "The following is a conversation with an AI assistant. The assistant is helpful, creative, clever, and very witty. `n"
        max_tokens        = 256
        temperature       = 0.9
        frequency_penalty = 0
        presence_penalty  = 0
        top_p             = 1
        best_of           = 1
        stop              = @("Human:", "AI:")
    } | ConvertTo-Json

    $response = Invoke-RestMethod -Uri $uri -Headers $headers -Method Post -Body $body

    # The generated text is in the first choice
    $response.choices[0].text
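Since this is a plain REST call, the same request can be sketched in Python using only the standard library. This is a minimal sketch that mirrors the PowerShell example above; the function names are my own, and `API_KEY`, the resource name, and the deployment name are placeholders you would replace with your own values.

```python
import json
import urllib.request

def build_completion_request(resource, deployment, api_key, prompt,
                             max_tokens=256, temperature=0.9,
                             api_version="2022-12-01"):
    """Build (but do not send) a completions request for an Azure OpenAI deployment."""
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/completions?api-version={api_version}")
    body = {
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
        "frequency_penalty": 0,
        "presence_penalty": 0,
        "top_p": 1,
        "best_of": 1,
        "stop": ["Human:", "AI:"],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )

def complete(request):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["text"]

request = build_completion_request(
    "lfa-openai-ai", "davinci-003", "API_KEY",
    "The following is a conversation with an AI assistant.\n",
)
print(request.full_url)
# With a real key in place, complete(request) would return the generated text.
```

Splitting the building of the request from the sending makes it easy to inspect the URL and body before anything goes over the wire.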

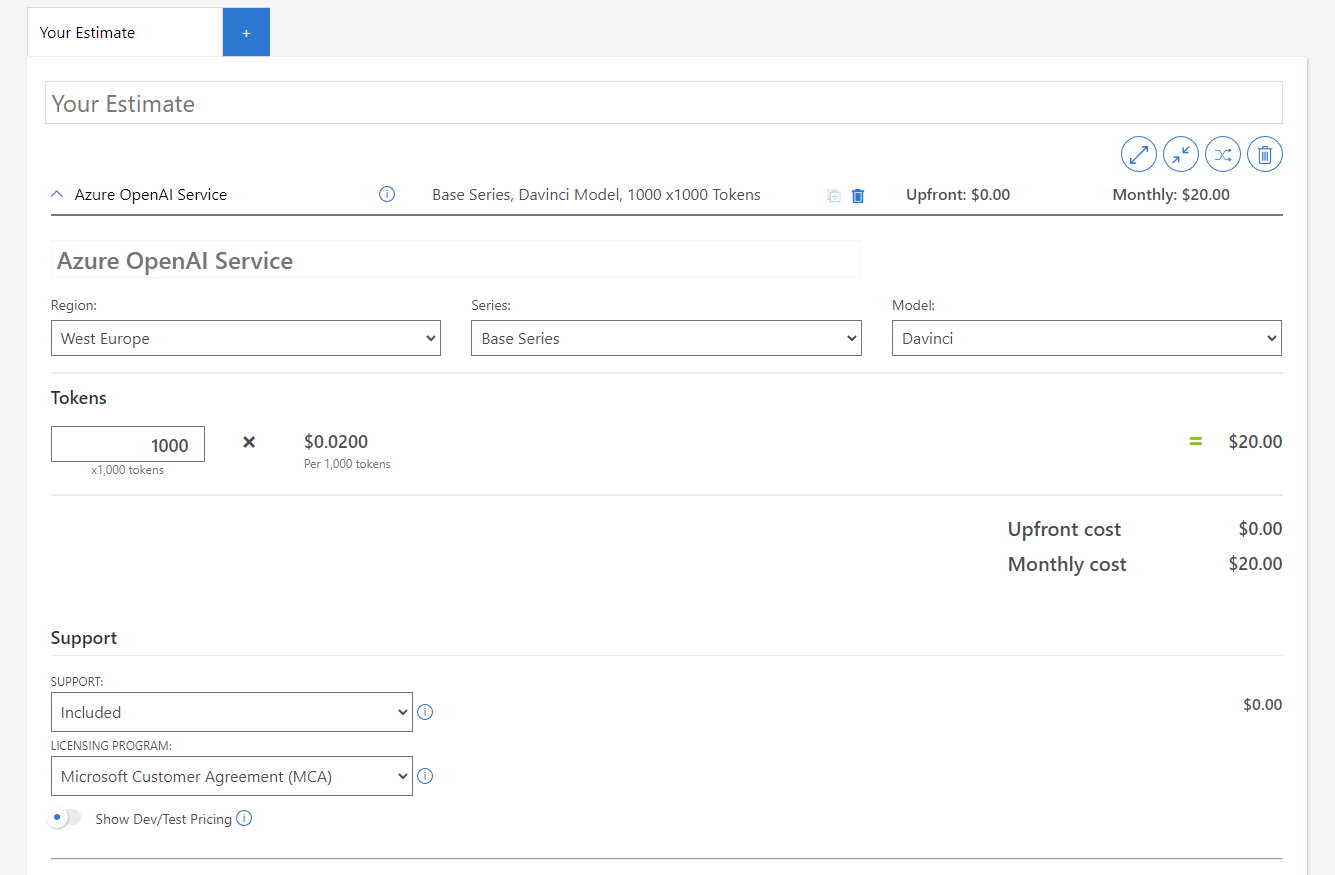

What about pricing?

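The solution's pricing is simple if you're not using fine-tuned models: you pay per 1,000 tokens, and the rate depends on the model. When you go the fine-tuning route, it gets more complicated, because training and hosting the model are billed as well.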

I suggest using the Azure Pricing calculator to give you a sense of cost and evolve from there.
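If you want a quick back-of-the-envelope number before opening the calculator, the arithmetic can be sketched in Python. This assumes the model is billed per 1,000 tokens; the function name is hypothetical and the price in the example is a placeholder, not a real rate, so look up the actual price for your model and region.

```python
def estimate_cost(total_tokens, price_per_1k_tokens):
    """Estimate the cost of a number of tokens at a given price per 1,000 tokens.

    Hypothetical helper; it ignores fine-tuning, hosting, and any other charges.
    """
    return total_tokens / 1000 * price_per_1k_tokens

# Placeholder numbers for illustration only
requests_per_day = 500
tokens_per_request = 300   # prompt plus response
placeholder_price = 0.02   # NOT a real price, replace with the published rate

daily_tokens = requests_per_day * tokens_per_request
print(daily_tokens, "tokens per day, estimated cost:", estimate_cost(daily_tokens, placeholder_price))
```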

Do I plan to use it?

Short answer? Absolutely!

First, I will research if I can consolidate the NLP and QnA portions of the chatbot I created into one service. I'm still using LUIS and QnA Maker and have yet to migrate to the new Language Studio, so it turned out to be an excellent idea that I postponed the rewrite. Why rewrite? Microsoft deprecated the Python SDK for Bot Framework, which slightly forced my hand.

Taking a step back, that's a good thing: now I can focus more on NLP than on QnA Maker, because the davinci model is much better at generating responses than a fixed list of answers that I provide. When it comes to wiki entries or something like that, I will fine-tune it on that specific part.

That being said, as always, have a good one!