
I've written about KEDA before, but only in little detail. A couple of weeks ago, a colleague asked me about KEDA because he saw my blog post regarding scalable jobs, and he had multiple questions that weren't evident from the documentation. I read documentation diagonally because I'm looking for the specifics of my problem, not problem statements, use cases, and things like that. I believe even you, who are reading this, will just skim through the content and take just what you need :)

What is KEDA?

Kubernetes Event-driven Autoscaling: KEDA is an epic piece of software that bridges your event sources (e.g., message queues, databases) with the Kubernetes Horizontal Pod Autoscaler (HPA), enabling event-driven scaling for any containerized application within your cluster.
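
To make the link to the HPA a bit more concrete, here is a minimal sketch of the replica math for a queue-style trigger. It assumes an average-value metric, where the HPA aims for roughly the total backlog divided by the target per replica, rounded up and clamped to the configured minimum and maximum. The function name and the sample numbers are purely illustrative and not part of KEDA.

```python
import math


def desired_replicas(backlog: int, target_per_replica: int,
                     min_replicas: int, max_replicas: int) -> int:
    """Approximate the replica count the HPA aims for with an average-value metric."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    # Total backlog divided by what one replica should handle, rounded up.
    wanted = math.ceil(backlog / target_per_replica)
    # Never go below the minimum or above the maximum.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 120 queued messages, 5 per replica, at most 5 replicas
    print(desired_replicas(120, 5, 1, 5))
    # 7 queued messages need two replicas
    print(desired_replicas(7, 5, 1, 5))
    # An empty queue falls back to the minimum
    print(desired_replicas(0, 5, 1, 5))
```

Scaling between zero and one replica is handled by KEDA itself rather than by the HPA, so treat this only as an approximation of the range above one replica.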

KEDA's Capabilities in Action:

KEDA boasts diverse features that empower developers to craft highly scalable and event-driven solutions. Let's explore some of its core capabilities:

Scalers Supported by KEDA in Azure:

KEDA extends its event-driven scaling capabilities to various event sources commonly used on Azure. Here's a breakdown of some popular options:

KEDA Scalers Documentation: Scalers | KEDA

Examples of KEDA in Action:

KEDA presents a compelling solution for achieving event-driven scalability within your clusters. Its flexibility, diverse event source support, and seamless integration make it a valuable tool for modern cloud-native applications. As you explore the vast potential of KEDA, remember to leverage the extensive documentation (even though, as I said, I don't read it that thoroughly).

Here are some starting points:

Benefits of Utilizing KEDA in Production:

Deployment Considerations:

While KEDA unlocks a new level of scalability, there are some key factors to consider when deploying it in production:

1. Scaling a Web Crawler with Azure Event Hub Scaler:

Here's an example that crawls a list of websites and pushes each page to Event Hub:

    import json

    import requests
    from azure.eventhub import EventData, EventHubProducerClient

    connection_str = "<event-hub-connection-string>"
    event_hub_name = "hubname"


    def crawl_website(url):
        """Crawls a website and sends an event to the event hub."""
        try:
            response = requests.get(url, timeout=30)
            response.raise_for_status()
            # Process website content here (e.g., extract data)
            data = {"url": url, "content": response.text}
            send_event_to_event_hub(data)
        except requests.exceptions.RequestException as e:
            print(f"Error crawling {url}: {e}")


    def send_event_to_event_hub(data):
        # Sending requires the producer client; the consumer client can only read.
        producer = EventHubProducerClient.from_connection_string(
            connection_str, eventhub_name=event_hub_name
        )
        with producer:
            # Events are sent in batches, even when there is only one.
            batch = producer.create_batch()
            batch.add(EventData(json.dumps(data)))
            producer.send_batch(batch)


    if __name__ == "__main__":
        urls = ["https://google.com"]
        for url in urls:
            crawl_website(url)
            print(f"Crawled {url}")

Build the image, push it to Azure Container Registry, and then deploy it with the following YAML.

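    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: web-crawler
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: web-crawler
      template:
        metadata:
          labels:
            app: web-crawler
        spec:
          containers:
          - name: web-crawler
            image: my-web-crawler-image:latest
            command: ["/bin/bash", "-c", "python web_crawler.py --event-hub-connection-string <hub-connection-string>"]
    ---
    # Maps the secret to the scaler's "connection" parameter.
    # The name eventhub-trigger-auth is only illustrative.
    apiVersion: keda.sh/v1alpha1
    kind: TriggerAuthentication
    metadata:
      name: eventhub-trigger-auth
    spec:
      secretTargetRef:
      - parameter: connection
        name: eventhub-connection-secret
        key: connectionString
    ---
    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: web-crawler-scaler
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: web-crawler
      minReplicaCount: 1
      maxReplicaCount: 5
      triggers:
      - type: azure-eventhub
        metadata:
          eventHubName: eventhub
          # The scaler also needs the checkpoint storage settings
          # (e.g. blobContainer and a storage connection); see the scaler docs.
        authenticationRef:
          name: eventhub-trigger-auth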

Explanation:

This deployment is based on an infinite loop, so don't do this in production 😸

2. Processing Image Uploads with Azure Storage Queue Scaler:

Here's a code example of an image processing service:

    from azure.storage.blob import BlobServiceClient
    from PIL import Image

    connection_str = "<storage-account-connection-string>"
    account_name = "<storage-account-name>"
    container_name = "images"


    def process_image(blob_name):
        """Downloads, processes, and saves an image from Azure Blob Storage."""
        blob_service_client = BlobServiceClient.from_connection_string(connection_str)
        blob_client = blob_service_client.get_container_client(
            container_name
        ).get_blob_client(blob_name)

        # Download image data
        with open(f"/tmp/{blob_name}", "wb") as download_file:
            download_file.write(blob_client.download_blob().readall())

        try:
            img = Image.open(f"/tmp/{blob_name}")
            resized_img = img.resize((256, 256))
            resized_img.save(f"/tmp/processed_{blob_name}")
        except Exception as e:
            print(f"Error processing image {blob_name}: {e}")


    if __name__ == "__main__":
        blob_service_client = BlobServiceClient.from_connection_string(connection_str)
        container_client = blob_service_client.get_container_client(container_name)
        # Process every blob currently in the container
        for blob in container_client.list_blobs():
            process_image(blob.name)

Build the image, push it to Azure Container Registry, and then deploy it with the following YAML.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: image-processor
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: image-processor
      template:
        metadata:
          labels:
            app: image-processor
        spec:
          containers:
          - name: image-processor
            image: my-image-processor-image:latest
            command: ["/bin/bash", "-c", "python image_processor.py --storage-account-name <storage-account-name> --queue-name image-uploads"]
    ---
    # Maps the secret to the scaler's "connection" parameter.
    # The name storage-trigger-auth is only illustrative.
    apiVersion: keda.sh/v1alpha1
    kind: TriggerAuthentication
    metadata:
      name: storage-trigger-auth
    spec:
      secretTargetRef:
      - parameter: connection
        name: storage-connection-secret
        key: connectionString
    ---
    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: image-processor-scaler
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: image-processor
      minReplicaCount: 1
      maxReplicaCount: 3
      triggers:
      - type: azure-queue
        metadata:
          queueName: image-uploads
        authenticationRef:
          name: storage-trigger-auth

Explanation:

Here's another example, this time showing a KEDA ScaledJob (rather than a ScaledObject) processing tasks triggered by events:

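    import argparse
    import json


    def process_task(task_data):
        print(f"Processing task: {task_data}")


    if __name__ == "__main__":
        # Read the payload from --process-event, matching the Job's command.
        parser = argparse.ArgumentParser()
        parser.add_argument("--process-event", dest="task_json",
                            nargs="?", const="", default="")
        args = parser.parse_args()
        if args.task_json:
            process_task(json.loads(args.task_json))
        else:
            print("Error: Missing task data")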

Build the image, push it to Azure Container Registry, and then deploy it with the following YAML.

    # The ScaledJob further down does not reference this Deployment;
    # KEDA creates the Jobs from the template under jobTargetRef.
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: task-processor
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: task-processor
      template:
        metadata:
          labels:
            app: task-processor
        spec:
          containers:
          - name: task-processor
            image: my-task-processor-image:latest
            command: ["/bin/bash", "-c", "python task_processor.py"]
    ---
    # Maps the secret to the scaler's "connection" parameter.
    # The name storage-trigger-auth is only illustrative.
    apiVersion: keda.sh/v1alpha1
    kind: TriggerAuthentication
    metadata:
      name: storage-trigger-auth
    spec:
      secretTargetRef:
      - parameter: connection
        name: storage-connection-secret
        key: connectionString
    ---
    apiVersion: keda.sh/v1alpha1
    kind: ScaledJob
    metadata:
      name: task-processor-job
    spec:
      jobTargetRef:
        template:
          spec:
            containers:
            - name: task-processor
              image: my-task-processor-image:latest
              command: ["/bin/bash", "-c", 'python task_processor.py --process-event "$KEDA_TASK_DATA"']
            restartPolicy: Never
      triggers:
      - type: azure-queue
        metadata:
          queueName: tasks
        authenticationRef:
          name: storage-trigger-auth
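
One caveat worth sketching for the ScaledJob above: as far as I know, KEDA only decides how many Jobs to start and does not hand the queue message to the container, so each Job normally has to dequeue its own message. Below is a minimal sketch of that pattern, assuming the azure-storage-queue package and a hypothetical STORAGE_CONNECTION_STRING environment variable. The process_one_message helper is illustrative and not something KEDA provides.

```python
import json
import os


def process_task(task_data):
    print(f"Processing task: {task_data}")


def process_one_message(queue_client) -> bool:
    """Take one message off the queue, process it, then delete it.

    Returns False when the queue is empty so the Job can simply exit.
    """
    message = queue_client.receive_message()
    if message is None:
        return False
    process_task(json.loads(message.content))
    # Delete only after processing succeeded so a crash does not lose the task.
    queue_client.delete_message(message)
    return True


def main():
    # Hypothetical variable name; wire it to your secret in the Job template.
    conn_str = os.environ.get("STORAGE_CONNECTION_STRING")
    if not conn_str:
        print("Error: STORAGE_CONNECTION_STRING is not set")
        return
    # Imported here so the helper above can be used without the Azure SDK.
    from azure.storage.queue import QueueClient

    client = QueueClient.from_connection_string(conn_str, queue_name="tasks")
    if not process_one_message(client):
        print("Queue is empty, nothing to do")


if __name__ == "__main__":
    main()
```

Deleting the message only after process_task returns means a failed Job leaves the task on the queue, where it becomes visible again once the visibility timeout expires.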

Explanation:

- $KEDA_TASK_DATA is where the script expects the event data from the queue. Keep in mind that KEDA only creates the Jobs; it does not inject the message payload for you, so either your own tooling fills this variable or the script has to dequeue the message itself.
- restartPolicy is set to Never, as each task is intended to be processed only once.
- The trigger is an Azure Storage Queue trigger, similar to the previous example.

Key Differences from ScaledObject:

- The script takes the task data passed in via $KEDA_TASK_DATA and processes it within the process_task function.
- Each Job runs to completion with a Never restart policy. KEDA scales the number of Jobs based on the backlog of events in the queue.

I use KEDA every day, and every time, I'm happy that I found it ages ago. Recently, I had to create a processing flow that handles five million or more events per minute when a spike happens, and KEDA scaled it out and back in without problems whenever the load required it.

The use cases for KEDA are vast, and I recommend experimenting with it. Install it in a cluster and play around with it. But be warned: if you have the cluster autoscaler on, KEDA will trigger it, so you need to be careful.

That's it, folks, have a good one!

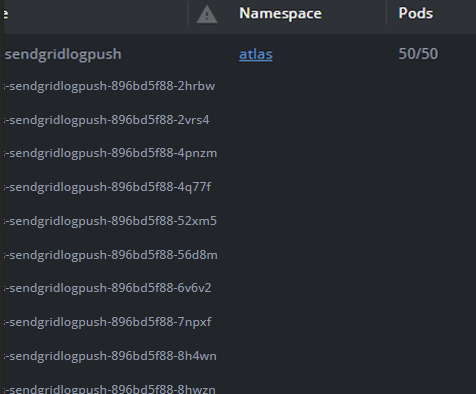

P.S. Keda chugging in production: